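Training servers generally use x86 chips, so you can pull an image with PyTorch already installed from hub.docker.com and use it as the training environment. This saves a lot of installation work. For example, pull pytorch/pytorch:1.2-cuda10.0-cudnn7-devel. In general, avoid runtime images: they contain only the minimal runtime support and lack many tools, whereas devel images are usually much more complete.

docker pull pytorch/pytorch:1.2-cuda10.0-cudnn7-devel

Then create the container with the `nvidia-docker run` command listed further down.

To support the Chinese character set, add the `export LANG` line listed further down to ~/.bashrc:

vi ~/.bashrc

Restart the container and reconnect to it. Then install tools and supporting packages inside the container:

apt-get update

apt-get install libglib2.0-dev libsm6 libxrender1 libxext6

pip install cython matplotlib opencv-python

Install cocoapi. Clone it, then build it with the commands listed further down:

git clone https://github.com/cocodataset/cocoapi.git

Next, download the EfficientDet source code inside the container. GitHub hosts several EfficientDet implementations. I first tried the most-starred one, https://github.com/toandaominh1997/EfficientDet.Pytorch, but training often failed to converge. The loss oscillated erratically and sometimes exploded to enormous values (over a billion, believe it or not). The implementation seemed to be faulty. Its issue comments showed that other people had hit the same problem, and someone recommended the stable signatrix version instead. I switched to it, and it did work well. When training d0/d1 models, the loss decreased steadily, without the odd oscillation and explosions of the toandaominh1997 version:

git clone https://github.com/signatrix/efficientdet.git

However, this version only supports d0/d1 models, whose EfficientNet backbones are b0/b1. It does not support levels 2 to 7 or the stronger adv-efficientnet backbones, and training with any other level fails with an error (the errors are listed further down).

It has a few other problems as well:

- It always downloads the pretrained weights from remote servers abroad, which is slow.
- train.py lacks some parameters, which makes it inflexible. For example, there is no way to resume training after an interruption.

I spent several days studying and modifying the source code until each of these fairly serious problems was solved, and then trained with the modified code. It is now stable and easy to use, and it can train with any backbone from b0 to b7 or adv-b0 to adv-b8. My code is at https://github.com/arnoldfychen/efficientdet, with a synchronized mirror on gitee at https://gitee.com/arnoldfychen. From within China, gitee is usually much faster than GitHub.

Download the source code:

git clone https://gitee.com/arnoldfychen/efficientdet.git

Install the remaining supporting packages:

cd efficientdet

pip install -r requirements.txt

Put your own COCO-format dataset and the downloaded pretrained models in place. The directory ../EfficientDet/pretrained_models holds all the pretrained models downloaded from https://github.com/lukemelas/EfficientNet-PyTorch/releases, and the `cp` command that copies them is listed further down. Link the dataset with:

ln -s /workspace/CenterNet/data data

Then modify the source code to match your GPU environment and dataset:

1) In train.py, adjust the following settings and parameters as needed. The remaining train.py parameters are listed further down.

os.environ['CUDA_VISIBLE_DEVICES']='3,4,5,6'

parser.add_argument("--image_size", type=int, default=512, help="The common width and height for all images")

2) In src/config.py: COCO_CLASSES=['fire']

3) In src/dataset.py, change the return value of `def num_classes(self):` (listed further down).

4) Adjust test_dataset.py as needed:

parser.add_argument("--image_size", type=int, default=512, help="The common width and height for all images")

5) Adjust mAP_evaluation.py as needed, in the block under `if __name__ == '__main__':` (the modified block is listed further down).

Then start training:

python -u train.py

When training finishes, run the following commands to test the model and evaluate mAP. Try both the best model and the latest model saved by the final run to see which performs better.

python -u test_dataset.py

python -u mAP_evaluation.py

Appendix:

1. With the https://github.com/signatrix/efficientdet code, any backbone other than efficientnet-b0 or efficientnet-b1 produces one of the errors listed further down. There is one error for each backbone: b2/b3, b4, b5, b6 and b7. The cause is that the output shape of one network layer does not match the input shape the next layer expects. The modified code at https://gitee.com/arnoldfychen/efficientdet fixes this.

2. If you get an out-of-memory error, reduce the batch_size parameter to fit your GPU memory.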
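When deciding how far to lower batch_size, it helps to know how many images each GPU actually holds. `nn.DataParallel` splits each input batch along dimension 0 using `torch.chunk` semantics. Each replica therefore receives ceil(B / N) images, and the last replica may get fewer. `CUDA_VISIBLE_DEVICES='3,4,5,6'` also renumbers GPUs 3 to 6 as devices 0 to 3 inside the process, which is why the traceback further down mentions "device 0".

The helpers `visible_gpu_count` and `per_replica_batch_sizes` below are hypothetical and only mirror that splitting rule. Whether train.py treats `--batch_size` as the total batch or as a per-GPU figure is not shown here. The `input[3, ...]` on replica 0 in the traceback, with the default of 3, hints at per-GPU, but check train.py to be sure.

```python
import math


def visible_gpu_count(cuda_visible_devices: str) -> int:
    """Count the device ids in a CUDA_VISIBLE_DEVICES-style string such as '3,4,5,6'."""
    return len([d for d in cuda_visible_devices.split(",") if d.strip()])


def per_replica_batch_sizes(total_batch: int, num_gpus: int) -> list:
    """Split a batch along dim 0 the way torch.chunk does: chunks of
    ceil(total_batch / num_gpus), the last one possibly smaller, and
    possibly fewer chunks than GPUs."""
    if total_batch <= 0 or num_gpus <= 0:
        raise ValueError("total_batch and num_gpus must be positive")
    chunk = math.ceil(total_batch / num_gpus)
    sizes = []
    remaining = total_batch
    while remaining > 0:
        sizes.append(min(chunk, remaining))
        remaining -= chunk
    return sizes


if __name__ == "__main__":
    gpus = visible_gpu_count("3,4,5,6")
    # Hypothetical: a per-GPU batch of 3 multiplied by the number of GPUs.
    print(gpus, per_replica_batch_sizes(3 * gpus, gpus))  # → 4 [3, 3, 3, 3]
```

With 10 images on 4 GPUs the split is [3, 3, 3, 1], so the first GPU still has to fit 3 images. Only a smaller batch lowers that peak.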

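Command to create the container. It mounts /home/xsrt/AI/work_pytorch on the host as /workspace and publishes port 8190. `--ipc=host` gives the container the host's shared memory, which PyTorch data-loader worker processes rely on.

nvidia-docker run -it --ipc=host --name pytorch1.2-efficientdet -v /home/xsrt/AI/work_pytorch:/workspace -p 8190:8190 pytorch/pytorch:1.2-cuda10.0-cudnn7-devel bash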

Line to add to ~/.bashrc:

export LANG="C.UTF-8"

Also install vim:

apt-get install vim

Commands to build and install cocoapi from the cloned repository:

cd cocoapi/PythonAPI

make

python setup.py install --user

Command to copy the pretrained models into the project directory:

cp -r ../EfficientDet/pretrained_models .
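With `--remote_loading` disabled, the backbone weights are read from ./pretrained_models. It is easy to copy the folder and still miss the one file a given backbone needs. How the modified code matches files to `--backbone_network` and `--advprop` is not shown here.

The sketch below assumes the files keep the release names used by lukemelas/EfficientNet-PyTorch, such as `efficientnet-b7-<hash>.pth` for standard weights and `adv-efficientnet-b7-<hash>.pth` for AdvProp weights. `find_backbone_weights` is a hypothetical helper for checking that the expected file is present. Those releases provide b8 weights only in the AdvProp variant, which is consistent with the b0-b7 / adv-b0-b8 ranges mentioned above.

```python
import glob
import os


def find_backbone_weights(backbone: str, advprop: bool, weights_dir: str = "pretrained_models") -> str:
    """Hypothetical helper: return the local checkpoint for a backbone.

    Assumes lukemelas/EfficientNet-PyTorch release file names, e.g.
    efficientnet-b7-<hash>.pth or adv-efficientnet-b7-<hash>.pth.
    """
    prefix = "adv-" if advprop else ""
    # glob matches from the start of the file name, so the plain pattern
    # does not pick up the adv- files and vice versa.
    pattern = os.path.join(weights_dir, f"{prefix}{backbone}-*.pth")
    matches = sorted(glob.glob(pattern))
    if not matches:
        raise FileNotFoundError(f"no file matching {pattern}")
    return matches[0]
```

For example, `find_backbone_weights("efficientnet-b7", advprop=True)` should resolve to the adv-efficientnet-b7 file if the copy above succeeded.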

Remaining train.py parameters to review:

parser.add_argument("--batch_size", type=int, default=3, help="The number of images per batch")

parser.add_argument("--num_epochs", type=int, default=300)

parser.add_argument("--test_interval", type=int, default=1, help="Number of epoches between testing phases")

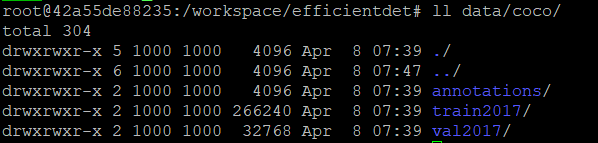

parser.add_argument("--data_path", type=str, default="data/coco", help="the root folder of dataset")

parser.add_argument("--saved_path", type=str, default="trained_models")

parser.add_argument("--save_interval", type=int, default=10, help="Number of epoches between two operations for saving weights")

parser.add_argument('--backbone_network', default='efficientnet-b7', type=str,

help='efficientdet-[b0, b1, ..]')

parser.add_argument('--remote_loading', default=False, type=bool,

help='if this option is enabled, it will download and load the backbone weights from https://github.com/lukemelas/EfficientNet-PyTorch/releases,'+

'otherwise,load the weights locally from ./pretrained_models')

parser.add_argument('--advprop', default=True, type=bool,

help='if this option is enabled, the adv_efficientnet_b* backbone will be used instead of efficientnet_b*')
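There is one caveat about `--remote_loading` and `--advprop`. With argparse, `type=bool` calls `bool()` on the raw command-line string, and every non-empty string, including "False", is truthy. As a result, `--advprop False` on the command line would still enable AdvProp, and only the defaults behave as intended. A common workaround is to parse the string explicitly. `str2bool` below is our own name for such a helper and is not part of the repository:

```python
import argparse


def str2bool(value: str) -> bool:
    """Parse textual booleans explicitly; type=bool would turn "False" into True."""
    lowered = value.strip().lower()
    if lowered in ("1", "true", "yes", "y", "on"):
        return True
    if lowered in ("0", "false", "no", "n", "off"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


parser = argparse.ArgumentParser()
parser.add_argument("--remote_loading", default=False, type=str2bool)
parser.add_argument("--advprop", default=True, type=str2bool)

if __name__ == "__main__":
    print(bool("False"))  # → True, the type=bool pitfall
    print(parser.parse_args(["--advprop", "False"]).advprop)  # → False
```

Non-string defaults are not passed through `type`, so `default=True` and `default=False` keep working unchanged.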

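Body of num_classes() in src/dataset.py, returning 1 for the single fire class instead of COCO's 80:

#return 80

return 1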
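The value returned by `num_classes()` and the list in `COCO_CLASSES` should agree with the categories in your own annotation file, since the class count presumably sizes the model's classification head. A quick check is to read the categories straight from the COCO JSON.

This sketch assumes the file sits at `<data_path>/annotations/instances_<set>.json`, a common convention for COCO loaders. Confirm the layout against src/dataset.py. `coco_category_names` is a hypothetical helper.

```python
import json
import os


def coco_category_names(data_path: str, set_name: str = "train2017") -> list:
    """Hypothetical helper: category names from a COCO-format annotation file,
    ordered by category id. Assumes <data_path>/annotations/instances_<set_name>.json."""
    ann_file = os.path.join(data_path, "annotations", f"instances_{set_name}.json")
    with open(ann_file, encoding="utf-8") as f:
        categories = json.load(f)["categories"]
    return [c["name"] for c in sorted(categories, key=lambda c: c["id"])]
```

For the single-class dataset used here, `coco_category_names("data/coco")` would be expected to return a one-element list. Its length is the number `num_classes()` has to return.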

Other test_dataset.py parameters to review:

parser.add_argument("--data_path", type=str, default="data/coco", help="the root folder of dataset")

parser.add_argument("--cls_threshold", type=float, default=0.5)

parser.add_argument("--nms_threshold", type=float, default=0.5)

parser.add_argument("--pretrained_model", type=str, default="trained_models/fire/signatrix_efficientdet_coco_latest.pth")

parser.add_argument("--output", type=str, default="predictions")
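The two thresholds play different roles under the usual convention (check how test_dataset.py passes them on):

- `--cls_threshold` drops detections whose class score is below it.
- `--nms_threshold` is the IoU above which a lower-scoring box overlapping a kept box is suppressed.

The pure-Python greedy NMS below illustrates that behaviour only. It is not the code the repository runs, which operates on tensors.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, cls_threshold=0.5, nms_threshold=0.5):
    """Score filtering followed by greedy NMS; returns kept indices, best score first."""
    candidates = [i for i, s in enumerate(scores) if s >= cls_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    for i in candidates:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in keep):
            keep.append(i)
    return keep
```

With the defaults of 0.5, two boxes such as (0, 0, 10, 10) and (1, 1, 11, 11), whose IoU is 81/119 ≈ 0.68, collapse to the higher-scoring one. Raising `--nms_threshold` above 0.68 would keep both.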

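Modified block under `if __name__ == '__main__':` in mAP_evaluation.py:

efficientdet = torch.load("trained_models/fire/signatrix_efficientdet_coco_latest.pth").module  # .module unwraps the nn.DataParallel wrapper saved during training

efficientdet.cuda()

dataset_val = CocoDataset("data/coco", set='val2017',

transform=transforms.Compose([Normalizer(), Resizer()]))

evaluate_coco(dataset_val, efficientdet)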
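To compare the best and latest checkpoints with numbers rather than by eye, it helps to know what the evaluation summary contains. If, as is typical, evaluate_coco goes through pycocotools' `COCOeval`, the printed summary corresponds to its 12-entry `stats` array, whose order is fixed for bbox evaluation. How you obtain that array (for instance by having a modified evaluate_coco return `coco_eval.stats`) is an assumption here. `label_coco_stats` and `best_checkpoint` are hypothetical helpers.

```python
# Order of the 12 values in pycocotools' COCOeval.stats for bbox evaluation.
COCO_STAT_NAMES = [
    "AP@[.50:.95]", "AP@.50", "AP@.75", "AP_small", "AP_medium", "AP_large",
    "AR@1", "AR@10", "AR@100", "AR_small", "AR_medium", "AR_large",
]


def label_coco_stats(stats):
    """Map a 12-value COCOeval.stats sequence to named metrics."""
    if len(stats) != len(COCO_STAT_NAMES):
        raise ValueError(f"expected 12 values, got {len(stats)}")
    return dict(zip(COCO_STAT_NAMES, (float(v) for v in stats)))


def best_checkpoint(results, key="AP@[.50:.95]"):
    """results maps a checkpoint name to its stats; return the name scoring highest on `key`."""
    return max(results, key=lambda name: label_coco_stats(results[name])[key])
```

Usage would look like `best_checkpoint({"best": best_stats, "latest": latest_stats})`. On a tie, the first entry wins.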

Backbone b2 or b3 (full traceback):

Caught RuntimeError in replica 0 on device 0.

Original Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/torch/nn/parallel/parallel_apply.py", line 60, in _worker

output = module(*input, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 547, in __call__

result = self.forward(*input, **kwargs)

File "/workspace/efficientdet/src/model.py", line 259, in forward

p3 = self.conv3(c3)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 547, in __call__

result = self.forward(*input, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/conv.py", line 343, in forward

return self.conv2d_forward(input, self.weight)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/conv.py", line 340, in conv2d_forward

self.padding, self.dilation, self.groups)

RuntimeError: Given groups=1, weight of size 64 40 1 1, expected input[3, 48, 64, 64] to have 40 channels, but got 48 channels instead

Backbone b4:

RuntimeError: Given groups=1, weight of size 64 40 1 1, expected input[3, 56, 64, 64] to have 40 channels, but got 56 channels instead

Backbone b5:

RuntimeError: Given groups=1, weight of size 64 40 1 1, expected input[3, 64, 64, 64] to have 40 channels, but got 64 channels instead

Backbone b6:

RuntimeError: Given groups=1, weight of size 64 40 1 1, expected input[3, 72, 64, 64] to have 40 channels, but got 72 channels instead

Backbone b7:

RuntimeError: Given groups=1, weight of size 64 40 1 1, expected input[3, 80, 64, 64] to have 40 channels, but got 80 channels instead
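The channel counts in these messages follow from EfficientNet's width scaling. The weight shape shows that `conv3` was built for 40 input channels. That is what the stride-8 stage (C3) of b0/b1 produces, while wider backbones produce more.

The sketch below reimplements the channel-rounding rule used by lukemelas/EfficientNet-PyTorch (its `round_filters`, with a divisor of 8) together with the published width coefficients. It reproduces the 48/56/64/72/80 values above. The 64 x 64 spatial size is 512 / 8 at `--image_size 512`, and the batch of 3 is the per-replica count seen in the traceback. The sketch only explains the mismatch and is not the fix in the modified repository.

```python
# Width coefficients of EfficientNet-b0..b8 (as in lukemelas/EfficientNet-PyTorch).
WIDTH_COEFFICIENTS = {
    "efficientnet-b0": 1.0, "efficientnet-b1": 1.0, "efficientnet-b2": 1.1,
    "efficientnet-b3": 1.2, "efficientnet-b4": 1.4, "efficientnet-b5": 1.6,
    "efficientnet-b6": 1.8, "efficientnet-b7": 2.0, "efficientnet-b8": 2.2,
}


def round_filters(filters, width_coefficient, divisor=8):
    """Scale a base channel count and round it to a multiple of `divisor`."""
    scaled = filters * width_coefficient
    new_filters = max(divisor, int(scaled + divisor / 2) // divisor * divisor)
    if new_filters < 0.9 * scaled:  # never round down by more than 10%
        new_filters += divisor
    return int(new_filters)


def c3_shape(backbone, image_size=512, batch=3, base_channels=40):
    """Shape [N, C, H/8, W/8] of the stride-8 feature map that reaches conv3."""
    channels = round_filters(base_channels, WIDTH_COEFFICIENTS[backbone])
    return [batch, channels, image_size // 8, image_size // 8]


if __name__ == "__main__":
    for name in WIDTH_COEFFICIENTS:
        print(name, c3_shape(name))
```

A layer whose input channels are derived from the chosen backbone, instead of a fixed 40, avoids this class of error.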
