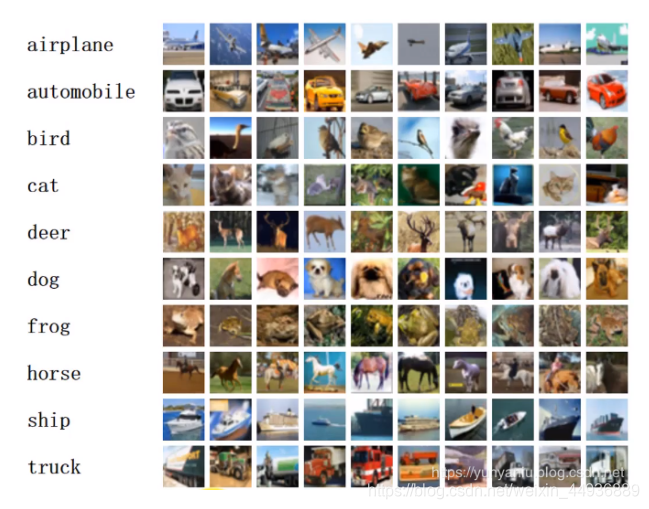

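No long preamble this time; let's go straight to the code. The final accuracy on the 10-class task comes out at roughly 98.4%.

CIFAR-10 dataset homepage: http://www.cs.toronto.edu/~kriz/cifar.html?usg=alkjrhjqbhw2llxlo8emqns-tbk0at96jq

CIFAR-10 is a 10-class dataset: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. Each image is 32 × 32 pixels and each class has 6,000 images; in total the dataset contains 50,000 training images and 10,000 test images (32 × 32 really is tiny...).

(The code was run on Baidu AI Studio.) A leading `!` marks a shell command; the download step boils down to: create the folder, download the data, move it into place, and list the files.

The network is a ResNet with 4 residual stages of 3 blocks each, with filter counts 16, 32, 32, 64. ELU is the activation in the stem convolution and after the final batch norm, while the bottleneck blocks in the listing use ReLU. The remaining steps define the placeholders for the input images and labels, the network output, a cross-entropy loss, and an Adam optimizer. Note that the GPU has to be selected explicitly with `fluid.CUDAPlace(0)`. Before training, the code checks whether a model was trained and saved earlier and, if so, reloads it.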
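To make the dataset description above concrete, here is a minimal NumPy sketch of how one CIFAR-10 record maps to an image tensor. It assumes the layout of the "python version" archive from the dataset homepage, where each image is a flat row of 3072 bytes (1024 red, then 1024 green, then 1024 blue, each plane in row-major order). The helper name `row_to_chw` and the synthetic record are illustrative and not part of the original code.

```python
import numpy as np

CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]


def row_to_chw(row):
    """Convert one flat 3072-byte record to a float32 CHW image in [0, 1]."""
    row = np.asarray(row, dtype=np.uint8)
    if row.size != 3 * 32 * 32:
        raise ValueError("expected 3072 values, got %d" % row.size)
    # Planes are stored R, G, B, each 32x32 row-major, so a reshape is enough.
    return row.reshape(3, 32, 32).astype(np.float32) / 255.0


# Synthetic record (not real data): red plane 255, green plane 0, blue plane 128.
fake_row = np.concatenate([
    np.full(1024, 255, dtype=np.uint8),
    np.zeros(1024, dtype=np.uint8),
    np.full(1024, 128, dtype=np.uint8),
])
image = row_to_chw(fake_row)
print(image.shape, len(CLASS_NAMES))
```

The channel-first shape (3, 32, 32) is the same one the input placeholder in step 5 declares.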

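[Deep Learning for Beginners] CIFAR-10 recognition (🐱🐕✈ and friends) with Paddle, explained step by step (based on ResNet)

0. Results first:

1. About the dataset:

2. Code: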

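1. Import the required packages:

    # Import the required packages
    import math  # used by the network definition (math.sqrt)
    import os

    import matplotlib.pyplot as plt
    import numpy as np
    import paddle
    import paddle.fluid as fluid
    from PIL import Image

    print(os.getcwd())  # show the working directory

2. Download the data: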

    !mkdir -p /home/aistudio/.cache/paddle/dataset/cifar/
    !wget "http://ai-atest.bj.bcebos.com/cifar-10-python.tar.gz" -O cifar-10-python.tar.gz
    !mv cifar-10-python.tar.gz /home/aistudio/.cache/paddle/dataset/cifar/
    !ls -a /home/aistudio/.cache/paddle/dataset/cifar/

3. Define the data loaders:
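The data loaders in this step combine a buffered shuffle with batching. As a rough, framework-free sketch of that idea (this is not Paddle's implementation; `buffered_shuffle`, `batched`, and `toy_reader` are hypothetical names), a reader can be modeled as a function that returns a fresh iterator of samples:

```python
import random


def buffered_shuffle(reader, buf_size, seed=None):
    """Wrap a reader so samples are shuffled within chunks of buf_size."""
    def shuffled_reader():
        rng = random.Random(seed)
        buf = []
        for sample in reader():
            buf.append(sample)
            if len(buf) >= buf_size:
                rng.shuffle(buf)
                for item in buf:
                    yield item
                buf = []
        # Flush whatever is left in the last, partially filled buffer.
        rng.shuffle(buf)
        for item in buf:
            yield item
    return shuffled_reader


def batched(reader, batch_size):
    """Wrap a reader so it yields lists of up to batch_size samples."""
    def batch_reader():
        batch = []
        for sample in reader():
            batch.append(sample)
            if len(batch) == batch_size:
                yield batch
                batch = []
        if batch:  # keep the final, smaller batch
            yield batch
    return batch_reader


def toy_reader():
    # Stand-in for a dataset reader: yields (feature, label) pairs.
    for i in range(10):
        yield (i, i % 2)


train_batches = list(batched(buffered_shuffle(toy_reader, buf_size=4, seed=0), 3)())
print([len(b) for b in train_batches])
```

Because shuffling only happens inside one buffer at a time, a larger `buf_size` gives a more thorough shuffle at the cost of memory; the post uses a buffer of 100 batches.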

    BATCH_SIZE = 64

    # Reader for the training data: shuffle within a buffer, then batch
    train_reader = paddle.batch(
        paddle.reader.shuffle(paddle.dataset.cifar.train10(),
                              buf_size=BATCH_SIZE * 100),
        batch_size=BATCH_SIZE)

    # Reader for the test data
    test_reader = paddle.batch(
        paddle.dataset.cifar.test10(),
        batch_size=BATCH_SIZE)

4. Define the network structure:
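Before reading the network code, it can help to trace the feature-map sizes by hand. The sketch below is plain Python arithmetic, not Paddle code. It assumes the usual floor-mode output-size formula, out = (in + 2*pad - kernel) // stride + 1, and mirrors the layout used in this post: four stages of three bottleneck blocks, base filter counts 16, 32, 32, 64, and a 4x channel expansion in each bottleneck. The function names are illustrative.

```python
def out_size(size, kernel, stride, pad):
    """Spatial output size of a convolution or pooling layer (floor mode)."""
    return (size + 2 * pad - kernel) // stride + 1


def trace_shapes(size=32, depth=(3, 3, 3, 3), num_filters=(16, 32, 32, 64)):
    """Return (channels, height, width) after the stem and after each stage."""
    shapes = []
    size = out_size(size, kernel=3, stride=1, pad=1)  # stem: 3x3 conv
    size = out_size(size, kernel=3, stride=2, pad=1)  # 3x3 max pool, stride 2
    shapes.append((16, size, size))
    for block, filters in enumerate(num_filters):
        for i in range(depth[block]):
            # Downsample in the first block of every stage except the first.
            stride = 2 if i == 0 and block != 0 else 1
            # Only the 3x3 conv inside a bottleneck carries the stride.
            size = out_size(size, kernel=3, stride=stride, pad=1)
        shapes.append((filters * 4, size, size))
    return shapes


for shape in trace_shapes():
    print(shape)
```

Under these assumptions the last feature map is 256 x 2 x 2, so global average pooling hands a 256-dimensional vector to the fully connected layer.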

    class DistResNet():
        def __init__(self, is_train=True):
            self.is_train = is_train
            self.weight_decay = 1e-4

        def net(self, input, class_dim=10):
            depth = [3, 3, 3, 3]            # bottleneck blocks per stage
            num_filters = [16, 32, 32, 64]  # base filter count per stage

            # Stem: 3x3 conv + batch norm (ELU), followed by max pooling
            conv = self.conv_bn_layer(
                input=input, num_filters=16, filter_size=3, act='elu')
            conv = fluid.layers.pool2d(
                input=conv,
                pool_size=3,
                pool_stride=2,
                pool_padding=1,
                pool_type='max')

            for block in range(len(depth)):
                for i in range(depth[block]):
                    # Downsample in the first block of every stage except the first
                    conv = self.bottleneck_block(
                        input=conv,
                        num_filters=num_filters[block],
                        stride=2 if i == 0 and block != 0 else 1)

            conv = fluid.layers.batch_norm(input=conv, act='elu')
            print(conv.shape)

            pool = fluid.layers.pool2d(
                input=conv, pool_size=2, pool_type='avg', global_pooling=True)
            stdv = 1.0 / math.sqrt(pool.shape[1] * 1.0)
            out = fluid.layers.fc(
                input=pool,
                size=class_dim,
                act="softmax",
                param_attr=fluid.param_attr.ParamAttr(
                    initializer=fluid.initializer.Uniform(-stdv, stdv),
                    regularizer=fluid.regularizer.L2Decay(self.weight_decay)),
                bias_attr=fluid.ParamAttr(
                    regularizer=fluid.regularizer.L2Decay(self.weight_decay)))
            return out

        def conv_bn_layer(self, input, num_filters, filter_size, stride=1,
                          groups=1, act=None, bn_init_value=1.0):
            # Convolution without bias, followed by batch norm and optional activation
            conv = fluid.layers.conv2d(
                input=input,
                num_filters=num_filters,
                filter_size=filter_size,
                stride=stride,
                padding=(filter_size - 1) // 2,
                groups=groups,
                act=None,
                bias_attr=False,
                param_attr=fluid.ParamAttr(
                    regularizer=fluid.regularizer.L2Decay(self.weight_decay)))
            return fluid.layers.batch_norm(
                input=conv,
                act=act,
                is_test=not self.is_train,
                param_attr=fluid.ParamAttr(
                    initializer=fluid.initializer.Constant(bn_init_value),
                    regularizer=None))

        def shortcut(self, input, ch_out, stride):
            # Use a 1x1 projection when the channel count or resolution changes
            ch_in = input.shape[1]
            if ch_in != ch_out or stride != 1:
                return self.conv_bn_layer(input, ch_out, 1, stride)
            else:
                return input

        def bottleneck_block(self, input, num_filters, stride):
            # 1x1 -> 3x3 (carries the stride) -> 1x1 with 4x channel expansion
            conv0 = self.conv_bn_layer(
                input=input, num_filters=num_filters, filter_size=1, act='relu')
            conv1 = self.conv_bn_layer(
                input=conv0,
                num_filters=num_filters,
                filter_size=3,
                stride=stride,
                act='relu')
            conv2 = self.conv_bn_layer(
                input=conv1,
                num_filters=num_filters * 4,
                filter_size=1,
                act=None,
                bn_init_value=0.0)
            short = self.shortcut(input, num_filters * 4, stride)
            return fluid.layers.elementwise_add(x=short, y=conv2, act='relu')

5. Define the input data:
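The placeholders in this step describe a single sample; the batch dimension is added on top. As a sketch of what feeding one batch amounts to (NumPy only, with a hypothetical `stack_batch` helper; this is not the `DataFeeder` implementation, and it assumes each sample is a flat 3072-value image plus an integer label), a list of samples becomes two arrays:

```python
import numpy as np


def stack_batch(samples):
    """Turn a list of (flat_image, label) samples into image and label arrays."""
    images = np.stack([
        np.asarray(img, dtype=np.float32).reshape(3, 32, 32)
        for img, _ in samples
    ])
    labels = np.asarray([[lab] for _, lab in samples], dtype=np.int64)
    return images, labels


# Four synthetic samples: all-zero flat images with labels 0..3.
samples = [(np.zeros(3072, dtype=np.float32), k) for k in range(4)]
images, labels = stack_batch(samples)
print(images.shape, labels.shape)
```

The resulting shapes, (N, 3, 32, 32) for images and (N, 1) for labels, line up with `shape=[3, 32, 32]` and `shape=[1]` once the batch dimension is counted.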

    data_shape = [3, 32, 32]  # channels, height, width
    images = fluid.layers.data(name='images', shape=data_shape, dtype='float32')
    label = fluid.layers.data(name='label', shape=[1], dtype='int64')

6. Get the classifier:
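The classifier's last layer applies softmax, so each row of its output is a probability distribution over the 10 classes. A small NumPy sketch of that final step follows; the logits are made up for illustration.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with max-subtraction for numerical stability."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


# Two made-up rows of 10 logits, one value per class.
logits = np.array([
    [2.0, 0.5, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [1000.0, 1000.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
])
probs = softmax(logits)
print(probs.sum(axis=1))
```

Subtracting the row maximum does not change the result but keeps `np.exp` from overflowing on large logits such as the second row.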

    import math  # needed by DistResNet.net (math.sqrt)

    model = DistResNet()
    predict = model.net(images)

7. Get the loss function and accuracy:
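For intuition, the three quantities computed in this step can be written out in NumPy. This is a sketch of the math (per-sample cross-entropy on probability rows, its mean, and top-1 accuracy), not the Paddle operators themselves; the probabilities below use 3 classes and are invented to keep the example short.

```python
import numpy as np


def cross_entropy(probs, labels, eps=1e-12):
    """Per-sample cross-entropy -log(p[label]) for rows of probabilities."""
    probs = np.asarray(probs, dtype=np.float64)
    labels = np.asarray(labels).reshape(-1)
    picked = probs[np.arange(len(labels)), labels]
    # Clip to avoid log(0) when a probability underflows.
    return -np.log(np.clip(picked, eps, 1.0))


def accuracy(probs, labels):
    """Fraction of rows whose most probable class equals the label."""
    labels = np.asarray(labels).reshape(-1)
    return float(np.mean(np.argmax(probs, axis=1) == labels))


probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.4, 0.3]])
labels = np.array([[0], [1], [2]])  # shape (N, 1), like the label placeholder
cost = cross_entropy(probs, labels)
avg_cost = cost.mean()
acc = accuracy(probs, labels)
print(avg_cost, acc)
```

The third row is misclassified (class 1 has the highest probability, the label is 2), so the accuracy here is 2/3.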

    cost = fluid.layers.cross_entropy(input=predict, label=label)  # cross-entropy
    avg_cost = fluid.layers.mean(cost)  # mean over all elements of cost
    acc = fluid.layers.accuracy(input=predict, label=label)  # accuracy from predictions and labels

8. Define the optimization method:
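As background for the optimizer in this step, here is a minimal NumPy sketch of the textbook Adam update. Only the learning rate 2e-4 comes from this post; the values of `beta1`, `beta2`, and `eps` are the commonly used defaults and are assumptions here, and a framework implementation may arrange the bias correction differently.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=2e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; returns new (param, m, v). t is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)               # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


# Toy problem: minimize f(w) = sum(w**2), whose gradient is 2*w.
w_start = np.array([1.0, -2.0])
w = w_start.copy()
m = np.zeros_like(w)
v = np.zeros_like(w)
for t in range(1, 101):
    w, m, v = adam_step(w, 2.0 * w, m, v, t)
print(w)
```

On the very first step the update has magnitude close to the learning rate regardless of the gradient's scale, which is why Adam is fairly insensitive to how the loss is scaled.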

    optimizer = fluid.optimizer.Adam(learning_rate=2e-4)
    optimizer.minimize(avg_cost)

9. Use the GPU for computation:

    place = fluid.CUDAPlace(0)  # run on GPU 0
    exe = fluid.Executor(place)
    exe.run(fluid.default_startup_program())  # initialize the parameters
    feeder = fluid.DataFeeder(feed_list=[images, label], place=place)

10. Define the training parameters:

    iter = 0          # number of training samples seen so far
    iters = []
    train_costs = []
    train_accs = []

    def draw_train_process(iters, train_costs, train_accs):
        title = "training costs/training accs"
        plt.title(title, fontsize=24)
        plt.xlabel("iter", fontsize=14)
        plt.ylabel("cost/acc", fontsize=14)
        plt.plot(iters, train_costs, color='red', label='training costs')
        plt.plot(iters, train_accs, color='green', label='training accs')
        plt.legend()
        plt.grid()
        plt.show()

11. Load a pretrained model (if there is one):

    EPOCH_NUM = 50
    model_save_dir = "/home/aistudio/data/catdog.inference.model"

    # Reload the parameters if a model was saved by an earlier run
    if os.path.exists(model_save_dir):
        fluid.io.load_params(executor=exe, dirname=model_save_dir, main_program=None)
        print('reloaded.')

12. Start training:
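The loop in this step averages the test metrics over batches. One detail worth keeping in mind: with 10,000 test images and a batch size of 64, the last batch holds only 16 images, so a plain mean over batches weights those 16 images as heavily as a full batch. A sketch of a sample-weighted average in plain Python follows; the helper names are hypothetical and the per-batch accuracies are invented for illustration.

```python
def weighted_mean(values, sizes):
    """Average per-batch values, weighting each batch by its sample count."""
    total = sum(sizes)
    return sum(v * n for v, n in zip(values, sizes)) / total


def batch_sizes(num_samples, batch_size):
    """Sizes of consecutive batches when the final partial batch is kept."""
    full, rest = divmod(num_samples, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


sizes = batch_sizes(10000, 64)
# Invented accuracies: 0.5 on every full batch, 1.0 on the small last batch.
accs = [0.5] * (len(sizes) - 1) + [1.0]
plain = sum(accs) / len(accs)
weighted = weighted_mean(accs, sizes)
print(len(sizes), sizes[-1], plain, weighted)
```

The gap is small for CIFAR-10 at this batch size, but the weighted form is the one that matches "correct predictions divided by total images".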

    # Evaluation-only copy of the program: clone(for_test=True) prunes the backward
    # and optimizer ops, so running it on test data does not update the weights.
    test_program = fluid.default_main_program().clone(for_test=True)

    for pass_id in range(EPOCH_NUM):
        # Training
        for batch_id, data in enumerate(train_reader()):
            train_cost, train_acc = exe.run(
                program=fluid.default_main_program(),
                feed=feeder.feed(data),
                fetch_list=[avg_cost, acc])
            if batch_id % 100 == 0:
                print('Pass:%d, Batch:%d, Cost:%0.5f, Accuracy:%0.5f' %
                      (pass_id, batch_id, np.mean(train_cost), np.mean(train_acc)))
            iter = iter + BATCH_SIZE
            iters.append(iter)
            train_costs.append(np.mean(train_cost))
            train_accs.append(np.mean(train_acc))

        # Testing
        test_costs = []  # test loss per batch
        test_accs = []   # test accuracy per batch
        for batch_id, data in enumerate(test_reader()):
            test_cost, test_acc = exe.run(
                program=test_program,          # run the test program
                feed=feeder.feed(data),        # feed one batch of data
                fetch_list=[avg_cost, acc])    # fetch loss and accuracy
            test_costs.append(test_cost[0])
            test_accs.append(test_acc[0])
        test_cost = sum(test_costs) / len(test_costs)  # mean loss over batches
        test_acc = sum(test_accs) / len(test_accs)     # mean accuracy over batches
        print('Test:%d, Cost:%0.5f, ACC:%0.5f' % (pass_id, test_cost, test_acc))

    # Save the model
    if not os.path.exists(model_save_dir):
        os.makedirs(model_save_dir)
    fluid.io.save_inference_model(model_save_dir, ['images'], [predict], exe)
    print('Model saved!')
    draw_train_process(iters, train_costs, train_accs)

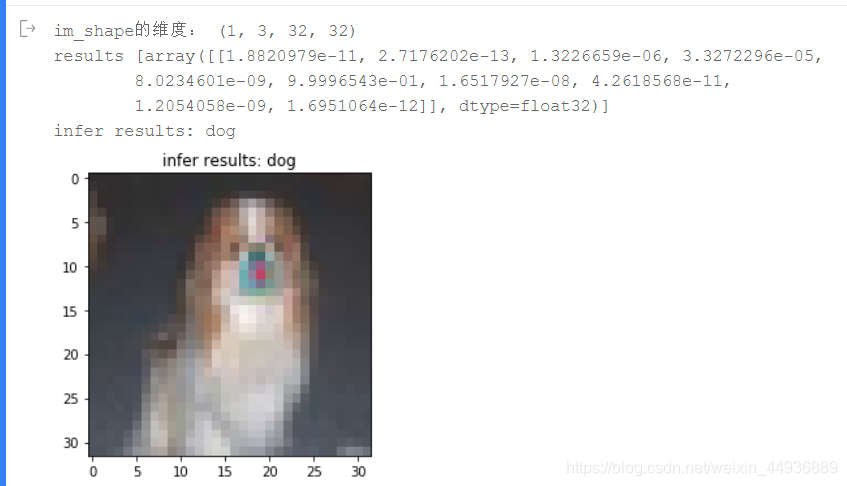

13. Visualize the test results:

    print(os.getcwd())

    infer_exe = fluid.Executor(place)
    inference_scope = fluid.core.Scope()

    def load_image(file):
        # Open the image
        im = Image.open(file)
        # Resize to 32*32 like the training data; LANCZOS is the anti-aliasing
        # filter (the same filter older Pillow versions call Image.ANTIALIAS)
        im = im.resize((32, 32), Image.LANCZOS)
        # Build the image matrix as float32
        im = np.array(im).astype(np.float32)
        # Transpose from HWC to CHW
        im = im.transpose((2, 0, 1))
        # Scale pixel values from [0, 255] to [0, 1]
        im = im / 255.0
        # Add a batch dimension so the shape matches the training input
        im = np.expand_dims(im, axis=0)
        print('im_shape:', im.shape)
        return im

    with fluid.scope_guard(inference_scope):
        # Load the inference model from the given directory
        [inference_program,   # program used for prediction
         feed_target_names,   # list of str: names of the variables that must be fed
         fetch_targets        # list of Variables holding the inference results
         ] = fluid.io.load_inference_model(model_save_dir,
                                           infer_exe)  # executor that runs the model

        infer_path = '/home/aistudio/data/data6430/img-47647-dog.png'
        img = load_image(infer_path)

        results = infer_exe.run(inference_program,                    # run the prediction program
                                feed={feed_target_names[0]: img},     # feed the image to predict
                                fetch_list=fetch_targets)             # fetch the prediction
        print('results', results)

    label_list = [
        "airplane", "automobile", "bird", "cat", "deer",
        "dog", "frog", "horse", "ship", "truck"
    ]

    # Show the original image with the predicted label as the title
    img = Image.open(infer_path)
    print("infer results: %s" % label_list[np.argmax(results[0])])
    plt.imshow(img)
    plt.title("infer results: %s" % label_list[np.argmax(results[0])])
    plt.show()
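The preprocessing and the label lookup above can be checked without a trained model. The sketch below uses NumPy only; `preprocess` and `top_label` are hypothetical helpers, and both the image and the probability vector are synthetic. It reproduces the HWC to CHW conversion, the scaling to [0, 1], the added batch dimension, and the argmax-to-label step.

```python
import numpy as np

label_list = ["airplane", "automobile", "bird", "cat", "deer",
              "dog", "frog", "horse", "ship", "truck"]


def preprocess(hwc_uint8):
    """HWC uint8 image (32x32x3) -> float32 array (1, 3, 32, 32) in [0, 1]."""
    chw = np.asarray(hwc_uint8, dtype=np.float32).transpose((2, 0, 1)) / 255.0
    return np.expand_dims(chw, axis=0)


def top_label(probs):
    """Name of the class with the highest probability."""
    return label_list[int(np.argmax(probs))]


# Synthetic pure-red image (not real data).
fake_image = np.zeros((32, 32, 3), dtype=np.uint8)
fake_image[..., 0] = 255
batch = preprocess(fake_image)

# Made-up probability vector that peaks at index 5.
fake_probs = np.full(10, 0.01)
fake_probs[5] = 0.91
print(batch.shape, top_label(fake_probs))
```

A quick check like this catches shape mistakes (for example feeding HWC instead of CHW) before a real image goes through the network.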
