mdadm是multiple devices admin的简称,它是Linux下的一款标准的软件 RAID 管理工具。 在linux系统中目前以MD(Multiple Devices)虚拟块设备的方式实现软件RAID,利用多个底层的块设备虚拟出一个新的虚拟设备,并且利用条带化(stripping)技术将数据块均匀分布到多个磁盘上来提高虚拟设备的读写性能,利用不同的数据冗祭算法来保护用户数据不会因为某个块设备的故障而完全丢失,而且还能在设备被替换后将丢失的数据恢复到新的设备上。 目前MD支持linear,multipath,raid0(stripping),raid1(mirror),raid4,raid5,raid6,raid10等不同的冗余级别和级成方式,当然也能支持多个RAID陈列的层叠组成raid1 0,raid5 1等类型的陈列。 mdadm -C -v 目录 -l 级别 -n 磁盘数量 设备路径 1.虚拟机磁盘准备 3.安装madam工具 分区格式需改为fd 1.创建RAID 0 2.格式化分区 3.格式化后挂载 1.创建RAID 1 2.查看RAID 1状态 3.格式化并挂载 模拟损坏后查看RAID1阵列详细信息,发现/dev/sdb1自动替换了损坏的/dev/sdd1磁盘。 注意: 要停止阵列,需要先将挂载的RAID先取消挂载才可以停止阵列,并且停止阵列之后会自动删除创建阵列的目录。 1.创建RAID 5 2.查看RAID 5阵列信息 3.模拟磁盘损坏 4.格式化并挂载 5.停止阵列 1.创建RAID 6阵列 2.查看RAID 6阵列信息 3.模拟磁盘损坏(同时损坏两块) 4.格式化并挂载 方法同上。 5.停止阵列 RAID 1+0是用两个RAID 1来创建。 1.创建两个RAID 1阵列 2.查看两个RAID 1阵列信息

Mdadm介绍:

环境介绍:

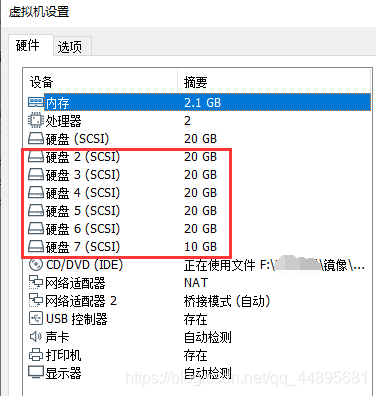

CentOS 7.5-Minimal VMware Workstation 15 mdadm工具 六块磁盘:sdb sdc sdd sde sdf sdg 常用参数:

参数

作用

-a

添加磁盘

-n

指定设备数量

-l

指定RAID级别

-C

创建

-v

显示过程

-f

模拟设备损坏

-r

移除设备

-Q

查看摘要信息

-D

查看详细信息

-S

停止RAID磁盘阵列

-x

指定空闲盘(热备磁盘)个数,空闲盘(热备磁盘)能在工作盘损坏后自动顶替

mdadm工具指令基本格式:

查看RAID阵列方法:

cat /proc/mdstat //查看状态 mdadm -D 目录 //查看详细信息
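The redundancy each level provides determines how much of the raw disk space is usable. The sketch below condenses that into a small shell function. The function name `raid_usable_size` is hypothetical. It assumes equal-sized members, a RAID 10 that keeps two copies of each block, and it ignores md metadata.

```shell
# raid_usable_size LEVEL N SIZE  (hypothetical helper)
# Prints the usable capacity of an md array built from N equal members of
# SIZE each; the result is in the same unit as SIZE. md metadata is ignored.
raid_usable_size() {
    local level=$1 n=$2 size=$3
    case "$level" in
        0)  echo $(( n * size )) ;;         # striping only, no redundancy
        1)  echo "$size" ;;                 # every member holds a full copy
        5)  echo $(( (n - 1) * size )) ;;   # one member's worth of parity
        6)  echo $(( (n - 2) * size )) ;;   # two members' worth of parity
        10) echo $(( n / 2 * size )) ;;     # striped mirror pairs (2 copies)
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}
```

Two results match the mdadm output later in this article:

- `raid_usable_size 5 3 20953088` gives 41906176, the RAID 5 Array Size.
- `raid_usable_size 6 4 10467328` gives 20934656, the RAID 6 Array Size.

The nested RAID 1+0 array reports 41871360, slightly less than the formula's 41906176, because the metadata overhead is not modelled here.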

2. Check the newly added disks:

    [root@localhost ~]# lsblk
    NAME            MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda               8:0    0   20G  0 disk
    ├─sda1            8:1    0    1G  0 part /boot
    └─sda2            8:2    0   19G  0 part
      ├─centos-root 253:0    0   17G  0 lvm  /
      └─centos-swap 253:1    0    2G  0 lvm  [SWAP]
    sdb               8:16   0   20G  0 disk    <- new
    sdc               8:32   0   20G  0 disk    <- new
    sdd               8:48   0   20G  0 disk    <- new
    sde               8:64   0   20G  0 disk    <- new
    sdf               8:80   0   20G  0 disk    <- new
    sdg               8:96   0   10G  0 disk    <- new
    sr0              11:0    1  906M  0 rom
    [root@localhost ~]# yum -y install mdadm    # install mdadm

Change the partition type:
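The fdisk dialogue in the session that follows can be scripted by piping the same answers into fdisk. This is a sketch under several assumptions:

- each disk is blank, so fdisk selects partition 1 automatically after `t`, as the session shows;
- the util-linux fdisk shipped with CentOS 7 is in use (prompts can differ in other versions);
- the helper names and the `DRY_RUN` variable are hypothetical conventions, not part of fdisk.

```shell
# fdisk_raid_keys  (hypothetical helper)
# Prints the answers typed in the interactive fdisk session:
# n (new), p (primary), 1 (partition number), two empty lines (default
# first and last sector), t (change type), fd (Linux raid autodetect), w (write).
fdisk_raid_keys() {
    printf 'n\np\n1\n\n\nt\nfd\nw\n'
}

# partition_for_raid DISK...  (hypothetical helper)
# WARNING: rewrites the partition table of every DISK given.
# With DRY_RUN=1 it only prints which disks would be partitioned.
partition_for_raid() {
    local disk
    for disk in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "fdisk $disk"
        else
            fdisk_raid_keys | fdisk "$disk" || return 1
        fi
    done
}
```

Running `DRY_RUN=1 partition_for_raid /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdg` first shows which disks would be touched. Afterwards, verify the result with `fdisk -l` as in the session.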

    ****************************************
    [root@localhost ~]# fdisk /dev/sdb    # partition sdb
    ...
    Command (m for help): n
    Partition type:
       p   primary (0 primary, 0 extended, 4 free)
       e   extended
    Select (default p):
    Using default response p
    Partition number (1-4, default 1):
    First sector (2048-41943039, default 2048):
    Using default value 2048
    Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):
    Using default value 41943039
    Partition 1 of type Linux and of size 20 GiB is set
    Command (m for help): t
    Selected partition 1
    Hex code (type L to list all codes): fd
    Changed type of partition 'Linux raid autodetect' to 'Linux raid autodetect'
    Command (m for help): p
    Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0xf056a1fe
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1            2048    41943039    20970496   fd  Linux raid autodetect
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    Syncing disks.
    ****************************************
    [root@localhost ~]# fdisk /dev/sdc    # partition sdc
    Command (m for help): p
    ...
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdc1            2048    41943039    20970496   fd  Linux raid autodetect
    ****************************************
    [root@localhost ~]# fdisk /dev/sdd    # partition sdd
    Command (m for help): p
    ...
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1            2048    41943039    20970496   fd  Linux raid autodetect
    ****************************************
    [root@localhost ~]# fdisk /dev/sde    # partition sde
    Command (m for help): p
    ...
       Device Boot      Start         End      Blocks   Id  System
    /dev/sde1            2048    41943039    20970496   fd  Linux raid autodetect
    ****************************************
    [root@localhost ~]# fdisk /dev/sdf    # partition sdf
    Command (m for help): p
    ...
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdf1            2048    41943039    20970496   fd  Linux raid autodetect
    ****************************************
    [root@localhost ~]# fdisk /dev/sdg
    Command (m for help): p
    ...
       Device Boot      Start         End      Blocks   Id  System
    /dev/sdg1            2048    20971519    10484736   fd  Linux raid autodetect

RAID 0 experiment
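The three RAID 0 steps in the session that follows (create, format as XFS, mount) can be chained in one function that stops at the first failure. The names `run` and `make_raid0` and the `DRY_RUN` switch are hypothetical. The sketch uses `mkdir -p` so an existing mount point is not an error. Note that it is not fully unattended: mdadm still asks "Continue creating array?" when a member already carries RAID metadata, as happens in the RAID 1 run.

```shell
# run CMD...: executes CMD, or only prints it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# make_raid0 MD MOUNTPOINT DEV...  (hypothetical helper)
# Creates a RAID 0 array from the given members, formats it as XFS and
# mounts it, stopping at the first command that fails.
make_raid0() {
    local md=$1 mnt=$2
    shift 2
    run mdadm -C -v "$md" -l 0 -n "$#" "$@" &&
        run mkfs.xfs "$md" &&
        run mkdir -p "$mnt" &&
        run mount "$md" "$mnt"
}
```

For example, `DRY_RUN=1 make_raid0 /dev/md0 /raid0 /dev/sdb1 /dev/sdc1` prints the same four commands used below without executing them.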

    [root@localhost ~]# mdadm -C -v /dev/md0 -l 0 -n 2 /dev/sdb1 /dev/sdc1    # create /dev/md0 as a RAID 0 array (level 0) from the 2 partitions sdb1 and sdc1
    [root@localhost ~]# cat /proc/mdstat    # check RAID 0
    Personalities : [raid0]
    md0 : active raid0 sdc1[1] sdb1[0]
          41906176 blocks super 1.2 512k chunks
    unused devices: <none>
    [root@localhost ~]# mdadm -D /dev/md0    # RAID 0 details
    /dev/md0:
          Version : 1.2
          Creation Time : Tue Apr 21 15:55:29 2020
          Raid Level : raid0
          Array Size : 41906176 (39.96 GiB 42.91 GB)
          Raid Devices : 2
          Total Devices : 2
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 15:55:29 2020
          State : clean
          Active Devices : 2
          Working Devices : 2
          Failed Devices : 0
          Spare Devices : 0
          Chunk Size : 512K
          Consistency Policy : none
          Name : localhost.localdomain:0  (local to host localhost.localdomain)
          UUID : 4b439b50:63314c34:0fb14c51:c9930745
          Events : 0
        Number   Major   Minor   RaidDevice State
           0       8       17        0      active sync   /dev/sdb1
           1       8       33        1      active sync   /dev/sdc1
    [root@localhost ~]# mkfs.xfs /dev/md0
    meta-data=/dev/md0               isize=512    agcount=16, agsize=654720 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=10475520, imaxpct=25
             =                       sunit=128    swidth=256 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=5120, version=2
             =                       sectsz=512   sunit=8 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0
    [root@localhost ~]# blkid /dev/md0
    /dev/md0: UUID="13a0c896-5e79-451f-b6f1-b04b79c1bc40" TYPE="xfs"
    [root@localhost ~]# mkdir /raid0    # create the mount point
    [root@localhost ~]# mount /dev/md0 /raid0/    # mount /dev/md0 on /raid0
    [root@localhost ~]# df -h    # check that the mount succeeded
    Filesystem               Size  Used Avail Use% Mounted on
    /dev/mapper/centos-root   17G   11G  6.7G  61% /
    devtmpfs                 1.1G     0  1.1G   0% /dev
    tmpfs                    1.1G     0  1.1G   0% /dev/shm
    ...
    /dev/md0                  40G   33M   40G   1% /raid0

RAID 1 experiment
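The RAID 1 command below adds a hot spare with `-x`. The hypothetical helper `mdadm_create_cmd` assembles commands of that shape: the last NSPARE devices become spares and the rest become active members. It only prints the command, so the line can be reviewed before it is run. This is a sketch of the article's command format, not a wrapper shipped with mdadm.

```shell
# mdadm_create_cmd MD LEVEL NSPARE DEV...  (hypothetical helper)
# Prints an mdadm create command in the format used in this article:
# the last NSPARE devices become hot spares (-x), the rest are active (-n).
mdadm_create_cmd() {
    local md=$1 level=$2 nspare=$3
    shift 3
    local nactive=$(( $# - nspare ))
    local members="" spares="" i=0 dev
    if [ "$nactive" -lt 1 ]; then
        echo "need at least one active member" >&2
        return 1
    fi
    for dev in "$@"; do
        i=$(( i + 1 ))
        if [ "$i" -le "$nactive" ]; then
            members="$members $dev"
        else
            spares="$spares $dev"
        fi
    done
    if [ "$nspare" -gt 0 ]; then
        echo "mdadm -C -v $md -l $level -n $nactive$members -x $nspare$spares"
    else
        echo "mdadm -C -v $md -l $level -n $nactive$members"
    fi
}
```

`mdadm_create_cmd /dev/md1 1 1 /dev/sdd1 /dev/sde1 /dev/sdb1` reproduces the RAID 1 command below. `mdadm_create_cmd /dev/md6 6 2 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1` gives the RAID 6 one.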

    [root@localhost ~]# mdadm -C -v /dev/md1 -l 1 -n 2 /dev/sdd1 /dev/sde1 -x 1 /dev/sdb1    # create /dev/md1 as a 2-device RAID 1 array from sdd1 and sde1, with sdb1 as the spare
    mdadm: Note: this array has metadata at the start and may not be suitable as a boot device.  If you plan to store '/boot' on this device please ensure that your boot-loader understands md/v1.x metadata, or use --metadata=0.90
    mdadm: /dev/sdb1 appears to be part of a raid array: level=raid0 devices=2 ctime=Tue Apr 21 15:55:29 2020
    mdadm: size set to 20953088K
    Continue creating array? y
    mdadm: Fail create md1 when using /sys/module/md_mod/parameters/new_array
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md1 started.
    [root@localhost ~]# cat /proc/mdstat
    Personalities : [raid0] [raid1]
    md1 : active raid1 sdb1[2](S) sde1[1] sdd1[0]
          20953088 blocks super 1.2 [2/2] [UU]
          [==========>..........]  resync = 54.4% (11407360/20953088) finish=0.7min speed=200040K/sec
    unused devices: <none>
    [root@localhost ~]# mdadm -D /dev/md1
    /dev/md1:
          Version : 1.2
          Creation Time : Tue Apr 21 16:11:16 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 3
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 16:12:34 2020
          State : clean, resyncing
          Active Devices : 2
          Working Devices : 3
          Failed Devices : 0
          Spare Devices : 1
          Consistency Policy : resync
          Resync Status : 77% complete
          Name : localhost.localdomain:1  (local to host localhost.localdomain)
          UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9
          Events : 12
        Number   Major   Minor   RaidDevice State
           0       8       49        0      active sync   /dev/sdd1
           1       8       65        1      active sync   /dev/sde1
           2       8       17        -      spare   /dev/sdb1
    [root@localhost ~]# mkfs.xfs /dev/md1
    [root@localhost ~]# blkid /dev/md1
    /dev/md1: UUID="18a8f33b-1bb6-43c2-8dfc-2b21a871961a" TYPE="xfs"
    [root@localhost ~]# mkdir /raid1
    [root@localhost ~]# mount /dev/md1 /raid1/
    [root@localhost ~]# df -h
    Filesystem               Size  Used Avail Use% Mounted on
    /dev/mapper/centos-root   17G   11G  6.7G  61% /
    ...
    /dev/md1                  20G   33M   20G   1% /raid1

4. Simulate a disk failure
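After a member is marked faulty, the "State" line of `mdadm -D` moves through "clean, degraded, recovering" back to "clean", as the output below shows. The sketch below polls for that automatically instead of rechecking by hand. The function names are hypothetical, and `wait_until_settled` needs root. It assumes mdadm prints the state as a `State : ...` line, which is what this article's output shows.

```shell
# md_state: reads `mdadm -D` output on stdin and prints the value of its
# "State :" line, e.g. "clean, degraded, recovering".
md_state() {
    awk -F' : ' '/^ *State :/ { print $2; exit }'
}

# md_busy STATE: succeeds while the array is still rebuilding or resyncing.
md_busy() {
    case "$1" in
        *recovering*|*resyncing*) return 0 ;;
        *) return 1 ;;
    esac
}

# wait_until_settled MD [SECONDS]  (hypothetical helper, needs root)
# Polls mdadm -D until the state no longer reports a rebuild or resync.
wait_until_settled() {
    local md=$1 interval=${2:-10} state
    while :; do
        state=$(mdadm -D "$md" | md_state)
        [ -n "$state" ] || return 1    # mdadm failed or printed no State line
        echo "$md: $state"
        md_busy "$state" || return 0
        sleep "$interval"
    done
}
```

For example, `mdadm /dev/md1 -f /dev/sdd1 && wait_until_settled /dev/md1 30` marks the disk faulty and then waits for the spare to finish rebuilding.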

    [root@localhost ~]# mdadm /dev/md1 -f /dev/sdd1
    [root@localhost ~]# mdadm -D /dev/md1    # check
    /dev/md1:
          Version : 1.2
          Creation Time : Tue Apr 21 16:11:16 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 3
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 16:29:38 2020
          State : clean, degraded, recovering    # rebuilding automatically
          Active Devices : 1
          Working Devices : 2
          Failed Devices : 1    # the failed disk
          Spare Devices : 1    # number of spare devices
          Consistency Policy : resync
          Rebuild Status : 46% complete
          Name : localhost.localdomain:1  (local to host localhost.localdomain)
          UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9
          Events : 26
        Number   Major   Minor   RaidDevice State
           2       8       17        0      spare rebuilding   /dev/sdb1
           1       8       65        1      active sync   /dev/sde1
           0       8       49        -      faulty   /dev/sdd1

The spare is automatically taking over from the failed disk. Wait a few minutes, then check the RAID 1 array details again:

    [root@localhost ~]# mdadm -D /dev/md1
    /dev/md1:
          Version : 1.2
          Creation Time : Tue Apr 21 16:11:16 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 3
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 16:30:39 2020
          State : clean    # clean: the replacement succeeded
          Active Devices : 2
          Working Devices : 2
          Failed Devices : 1    # the failed disk
          Spare Devices : 0    # no spare devices left
          Consistency Policy : resync
          Name : localhost.localdomain:1  (local to host localhost.localdomain)
          UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9
          Events : 36
        Number   Major   Minor   RaidDevice State
           2       8       17        0      active sync   /dev/sdb1
           1       8       65        1      active sync   /dev/sde1
           0       8       49        -      faulty   /dev/sdd1

5. Remove the failed disk
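In the `mdadm -D` output, failed members are the rows of the device table whose state is `faulty`. This sketch finds them and hot-removes each one, as the manual `mdadm -r` step below does for /dev/sdd1. Both function names are hypothetical, and `remove_faulty` needs root. The parsing assumes the table layout shown in this article, with the device path as the last column.

```shell
# faulty_devices: reads `mdadm -D` output on stdin and prints the path of
# every member listed as faulty in the device table at the end.
faulty_devices() {
    awk '/ faulty / && $NF ~ /^\/dev\// { print $NF }'
}

# remove_faulty MD  (hypothetical helper, needs root)
# Hot-removes every faulty member of MD with `mdadm -r`.
remove_faulty() {
    local md=$1 dev
    for dev in $(mdadm -D "$md" | faulty_devices); do
        mdadm -r "$md" "$dev" || return 1
    done
}
```

For the RAID 1 state above, `mdadm -D /dev/md1 | faulty_devices` would list only /dev/sdd1. For the RAID 6 run later, it would list /dev/sdb1 and /dev/sdc1.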

    [root@localhost ~]# mdadm -r /dev/md1 /dev/sdd1
    mdadm: hot removed /dev/sdd1 from /dev/md1
    [root@localhost ~]# mdadm -D /dev/md1
    /dev/md1:
          Version : 1.2
          Creation Time : Tue Apr 21 16:11:16 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 2
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 16:38:32 2020
          State : clean
          Active Devices : 2
          Working Devices : 2
          Failed Devices : 0    # the failed disk has been removed, so it is no longer counted
          Spare Devices : 0
          Consistency Policy : resync
          Name : localhost.localdomain:1  (local to host localhost.localdomain)
          UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9
          Events : 37
        Number   Major   Minor   RaidDevice State
           2       8       17        0      active sync   /dev/sdb1
           1       8       65        1      active sync   /dev/sde1

6. Add a new disk to the RAID 1 array:

    [root@localhost ~]# mdadm -a /dev/md1 /dev/sdc1    # add /dev/sdc1 to the RAID 1 array as a spare
    mdadm: added /dev/sdc1
    [root@localhost ~]# mdadm -D /dev/md1
    /dev/md1:
          Version : 1.2
          Creation Time : Tue Apr 21 16:11:16 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 3
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 16:40:20 2020
          State : clean
          Active Devices : 2
          Working Devices : 3
          Failed Devices : 0
          Spare Devices : 1    # the newly added disk now shows up as a spare
          Consistency Policy : resync
          Name : localhost.localdomain:1  (local to host localhost.localdomain)
          UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9
          Events : 38
        Number   Major   Minor   RaidDevice State
           2       8       17        0      active sync   /dev/sdb1
           1       8       65        1      active sync   /dev/sde1
           3       8       33        -      spare   /dev/sdc1
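For a quick health check across all arrays, /proc/mdstat shows each redundant array's member map. For md1 in the RAID 1 run this is `[2/2] [UU]`, and an underscore in the map marks a missing or failed member. The sketch below reports that map per array. The function name `mdstat_health` is hypothetical. Its parsing assumes the two-line-per-array layout shown in this article, and RAID 0 arrays are skipped because they have no member map.

```shell
# mdstat_health [FILE]  (hypothetical helper)
# Reads /proc/mdstat (or FILE) and prints "<name> <map> OK|DEGRADED" for each
# redundant array; "_" in the [UU_]-style map marks a missing member.
mdstat_health() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        match($0, /\[[U_]+\]/) {
            map = substr($0, RSTART, RLENGTH)
            print name, map, (index(map, "_") ? "DEGRADED" : "OK")
        }
    ' "${1:-/proc/mdstat}"
}
```

Running `mdstat_health` with no argument reads the live /proc/mdstat. Pass a file name to check a saved copy.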

7. Stop the RAID array
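An array can only be stopped once it is no longer mounted. The sketch below checks /proc/mounts first, unmounts only if needed, and then runs `mdadm -S`. The names `run`, `stop_md` and the `DRY_RUN` switch are hypothetical. It assumes the array appears in the mounts table under the same path passed in (e.g. /dev/md1). A mount made through another name, such as a /dev/md/ symlink, would not be detected.

```shell
# run CMD...: executes CMD, or only prints it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# stop_md MD [MOUNTS]  (hypothetical helper)
# Unmounts MD if it is listed in MOUNTS (default /proc/mounts), then stops it.
stop_md() {
    local md=$1 mounts=${2:-/proc/mounts}
    if awk -v dev="$md" '$1 == dev { found = 1 } END { exit !found }' "$mounts"; then
        run umount "$md" || return 1
    fi
    run mdadm -S "$md"
}
```

`DRY_RUN=1 stop_md /dev/md1` prints the commands it would run. After a real run, `ls /dev/md1` should fail as in the session below.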

    [root@localhost ~]# umount /dev/md1
    [root@localhost ~]# df -h
    Filesystem               Size  Used Avail Use% Mounted on
    /dev/mapper/centos-root   17G   11G  6.7G  61% /
    devtmpfs                 1.1G     0  1.1G   0% /dev
    tmpfs                    1.1G     0  1.1G   0% /dev/shm
    tmpfs                    1.1G  9.7M  1.1G   1% /run
    tmpfs                    1.1G     0  1.1G   0% /sys/fs/cgroup
    /dev/sda1               1014M  130M  885M  13% /boot
    overlay                   17G   11G  6.7G  61% /var/lib/docker/overlay2/2131dc663296fd193837265e88fa5c9c62b9bfd924303381cea8b4c39c652c84/merged
    shm                       64M     0   64M   0% /var/lib/docker/containers/436f7e6619c1805553ea71d800fd49ab08843cef6ed162acb35b4c32064ea449/mounts/shm
    tmpfs                    211M     0  211M   0% /run/user/0
    [root@localhost ~]# mdadm -S /dev/md1
    mdadm: stopped /dev/md1
    [root@localhost ~]# ls /dev/md1
    ls: cannot access /dev/md1: No such file or directory

RAID 5 experiment
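RAID 5 gives up one member's worth of space to parity, and RAID 6 gives up two. So in the `mdadm -D` output, Array Size should equal (Raid Devices minus parity) times Used Dev Size. Both sizes are in the 1 KiB units mdadm reports. The sketch below checks that relationship. The function names are hypothetical, and the parsing assumes the `Label : value` lines shown in this article.

```shell
# md_sizes: reads `mdadm -D` output on stdin and prints
# "ARRAY_SIZE USED_DEV_SIZE RAID_DEVICES" as plain numbers.
md_sizes() {
    awk -F' : ' '
        /^ *Array Size :/    { split($2, a, " "); arr = a[1] }
        /^ *Used Dev Size :/ { split($2, u, " "); used = u[1] }
        /^ *Raid Devices :/  { n = $2 + 0 }
        END { print arr, used, n }
    '
}

# parity_size_ok LEVEL  (hypothetical helper)
# Succeeds when Array Size == (Raid Devices - parity) * Used Dev Size,
# with one parity member's worth of space for RAID 5 and two for RAID 6.
parity_size_ok() {
    local parity
    case "$1" in
        5) parity=1 ;;
        6) parity=2 ;;
        *) return 2 ;;
    esac
    md_sizes | {
        read -r arr used n
        [ "$arr" -eq $(( (n - parity) * used )) ]
    }
}
```

With the RAID 5 array below, `mdadm -D /dev/md5 | parity_size_ok 5` should succeed, because 41906176 = 2 × 20953088.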

    [root@localhost ~]# mdadm -C -v /dev/md5 -l 5 -n 3 /dev/sdb1 /dev/sdc1 /dev/sdd1 -x 1 /dev/sde1    # create /dev/md5 as a 3-device RAID 5 array from sdb1, sdc1 and sdd1, with sde1 as the spare
    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: chunk size defaults to 512K
    mdadm: /dev/sdb1 appears to be part of a raid array: level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020
    mdadm: /dev/sdc1 appears to be part of a raid array: level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020
    mdadm: /dev/sdd1 appears to be part of a raid array: level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020
    mdadm: /dev/sde1 appears to be part of a raid array: level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020
    mdadm: size set to 20953088K
    Continue creating array? y
    mdadm: Fail create md5 when using /sys/module/md_mod/parameters/new_array
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md5 started.
    [root@localhost ~]# cat /proc/mdstat
    Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]
    md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]
          41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]
    unused devices: <none>
    [root@localhost ~]# mdadm -D /dev/md5
    /dev/md5:
          Version : 1.2
          Creation Time : Tue Apr 21 16:56:09 2020
          Raid Level : raid5
          Array Size : 41906176 (39.96 GiB 42.91 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 3
          Total Devices : 4
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 16:59:56 2020
          State : clean
          Active Devices : 3
          Working Devices : 4
          Failed Devices : 0
          Spare Devices : 1    # one spare device
          Layout : left-symmetric
          Chunk Size : 512K
          Consistency Policy : resync
          Name : localhost.localdomain:5  (local to host localhost.localdomain)
          UUID : 422363cb:e7fd4d3a:aaf61344:9bdd00b3
          Events : 18
        Number   Major   Minor   RaidDevice State
           0       8       17        0      active sync   /dev/sdb1
           1       8       33        1      active sync   /dev/sdc1
           4       8       49        2      active sync   /dev/sdd1
           3       8       65        -      spare   /dev/sde1
    [root@localhost ~]# mdadm -f /dev/md5 /dev/sdb1
    mdadm: set /dev/sdb1 faulty in /dev/md5    # sdb1 is now marked as failed
    [root@localhost ~]# mdadm -D /dev/md5
    /dev/md5:
          Version : 1.2
          Creation Time : Tue Apr 21 16:56:09 2020
          Raid Level : raid5
          Array Size : 41906176 (39.96 GiB 42.91 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 3
          Total Devices : 4
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 17:04:36 2020
          State : clean, degraded, recovering    # replacing automatically
          Active Devices : 2
          Working Devices : 3
          Failed Devices : 1
          Spare Devices : 1
          Layout : left-symmetric
          Chunk Size : 512K
          Consistency Policy : resync
          Rebuild Status : 16% complete
          Name : localhost.localdomain:5  (local to host localhost.localdomain)
          UUID : 422363cb:e7fd4d3a:aaf61344:9bdd00b3
          Events : 22
        Number   Major   Minor   RaidDevice State
           3       8       65        0      spare rebuilding   /dev/sde1
           1       8       33        1      active sync   /dev/sdc1
           4       8       49        2      active sync   /dev/sdd1
           0       8       17        -      faulty   /dev/sdb1
    **************************
    [root@localhost ~]# mdadm -D /dev/md5
    /dev/md5:
          Version : 1.2
          Creation Time : Tue Apr 21 16:56:09 2020
          Raid Level : raid5
          Array Size : 41906176 (39.96 GiB 42.91 GB)
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 3
          Total Devices : 4
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 17:07:58 2020
          State : clean    # automatic replacement succeeded
          Active Devices : 3
          Working Devices : 3
          Failed Devices : 1    # one failed disk
          Spare Devices : 0    # no spares left, the spare has replaced sdb1
          Layout : left-symmetric
          Chunk Size : 512K
          Consistency Policy : resync
          Name : localhost.localdomain:5  (local to host localhost.localdomain)
          UUID : 422363cb:e7fd4d3a:aaf61344:9bdd00b3
          Events : 37
        Number   Major   Minor   RaidDevice State
           3       8       65        0      active sync   /dev/sde1
           1       8       33        1      active sync   /dev/sdc1
           4       8       49        2      active sync   /dev/sdd1
           0       8       17        -      faulty   /dev/sdb1
    [root@localhost ~]# mkdir /raid5
    [root@localhost ~]# mkfs.xfs /dev/md5
    [root@localhost ~]# mount /dev/md5 /raid5/
    [root@localhost ~]# df -h
    Filesystem               Size  Used Avail Use% Mounted on
    /dev/mapper/centos-root   17G   11G  6.7G  61% /
    ...
    /dev/md5                  40G   33M   40G   1% /raid5
    [root@localhost ~]# mdadm -S /dev/md5
    mdadm: stopped /dev/md5

RAID 6 experiment
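RAID 6 keeps two independent parity blocks per stripe. That is why the experiment below can fail /dev/sdb1 and /dev/sdc1 at the same time and still rebuild onto the two spares. The sketch summarises the number of simultaneous failures each level is guaranteed to survive. The function name is hypothetical, and RAID 10 is assumed to keep two copies of each block. Hot spares do not raise these numbers; they only restore redundancy once a rebuild has finished.

```shell
# raid_failures_tolerated LEVEL N  (hypothetical helper)
# Prints how many simultaneous member failures an array of N members is
# guaranteed to survive, not counting hot spares.
raid_failures_tolerated() {
    case "$1" in
        0)  echo 0 ;;
        1)  echo $(( $2 - 1 )) ;;   # one surviving mirror is enough
        5)  echo 1 ;;
        6)  echo 2 ;;
        10) echo 1 ;;               # worst case: both disks of one mirror pair
        *)  echo "unsupported level: $1" >&2; return 1 ;;
    esac
}
```

For the four-member array below, `raid_failures_tolerated 6 4` gives 2, matching the two disks failed at once.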

    [root@localhost ~]# mdadm -C -v /dev/md6 -l 6 -n 4 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 -x 2 /dev/sdf1 /dev/sdg1    # create /dev/md6 as a 4-device RAID 6 array from sdb1, sdc1, sdd1 and sde1, with sdf1 and sdg1 as spares
    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: chunk size defaults to 512K
    mdadm: /dev/sdb1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020
    mdadm: partition table exists on /dev/sdb1 but will be lost or meaningless after creating array
    mdadm: /dev/sdc1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020
    mdadm: /dev/sdd1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020
    mdadm: /dev/sde1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020
    mdadm: /dev/sdf1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020
    mdadm: size set to 10467328K
    mdadm: largest drive (/dev/sdb1) exceeds size (10467328K) by more than 1%
    Continue creating array? y
    mdadm: Fail create md6 when using /sys/module/md_mod/parameters/new_array
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md6 started.
    [root@localhost ~]# cat /proc/mdstat
    Personalities : [raid6] [raid5] [raid4]
    md6 : active raid6 sdg1[5](S) sdf1[4](S) sde1[3] sdd1[2] sdc1[1] sdb1[0]
          20934656 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]
    unused devices: <none>
    [root@localhost ~]# mdadm -D /dev/md6
    /dev/md6:
          Version : 1.2
          Creation Time : Tue Apr 21 17:54:25 2020
          Raid Level : raid6
          Array Size : 20934656 (19.96 GiB 21.44 GB)
          Used Dev Size : 10467328 (9.98 GiB 10.72 GB)
          Raid Devices : 4
          Total Devices : 6
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 17:58:16 2020
          State : clean
          Active Devices : 4
          Working Devices : 6
          Failed Devices : 0
          Spare Devices : 2
          Layout : left-symmetric
          Chunk Size : 512K
          Consistency Policy : resync
          Name : localhost.localdomain:6  (local to host localhost.localdomain)
          UUID : 9a5c470e:eb95d0b4:2a213dac:f0fd3315
          Events : 17
        Number   Major   Minor   RaidDevice State
           0       8       17        0      active sync   /dev/sdb1
           1       8       33        1      active sync   /dev/sdc1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1
           4       8       81        -      spare   /dev/sdf1
           5       8       97        -      spare   /dev/sdg1
    [root@localhost ~]# mdadm -f /dev/md6 /dev/sdb1    # fail sdb1
    mdadm: set /dev/sdb1 faulty in /dev/md6
    [root@localhost ~]# mdadm -f /dev/md6 /dev/sdc1    # fail sdc1
    mdadm: set /dev/sdc1 faulty in /dev/md6
    [root@localhost ~]# mdadm -D /dev/md6    # check the RAID 6 array status
    /dev/md6:
          Version : 1.2
          Creation Time : Tue Apr 21 17:54:25 2020
          Raid Level : raid6
          Array Size : 20934656 (19.96 GiB 21.44 GB)
          Used Dev Size : 10467328 (9.98 GiB 10.72 GB)
          Raid Devices : 4
          Total Devices : 6
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 18:01:46 2020
          State : clean, degraded, recovering    # replacement in progress
          Active Devices : 2
          Working Devices : 4
          Failed Devices : 2    # two failed disks
          Spare Devices : 2    # two spare devices
          Layout : left-symmetric
          Chunk Size : 512K
          Consistency Policy : resync
          Rebuild Status : 19% complete
          Name : localhost.localdomain:6  (local to host localhost.localdomain)
          UUID : 9a5c470e:eb95d0b4:2a213dac:f0fd3315
          Events : 29
        Number   Major   Minor   RaidDevice State
           5       8       97        0      spare rebuilding   /dev/sdg1
           4       8       81        1      spare rebuilding   /dev/sdf1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1
           0       8       17        -      faulty   /dev/sdb1
           1       8       33        -      faulty   /dev/sdc1
    *****************************
    [root@localhost ~]# mdadm -D /dev/md6
    /dev/md6:
          Version : 1.2
          Creation Time : Tue Apr 21 17:54:25 2020
          Raid Level : raid6
          Array Size : 20934656 (19.96 GiB 21.44 GB)
          Used Dev Size : 10467328 (9.98 GiB 10.72 GB)
          Raid Devices : 4
          Total Devices : 6
          Persistence : Superblock is persistent
          Update Time : Tue Apr 21 18:04:02 2020
          State : clean    # replaced automatically
          Active Devices : 4
          Working Devices : 4
          Failed Devices : 2
          Spare Devices : 0    # no spare devices left
          Layout : left-symmetric
          Chunk Size : 512K
          Consistency Policy : resync
          Name : localhost.localdomain:6  (local to host localhost.localdomain)
          UUID : 9a5c470e:eb95d0b4:2a213dac:f0fd3315
          Events : 43
        Number   Major   Minor   RaidDevice State
           5       8       97        0      active sync   /dev/sdg1
           4       8       81        1      active sync   /dev/sdf1
           2       8       49        2      active sync   /dev/sdd1
           3       8       65        3      active sync   /dev/sde1
           0       8       17        -      faulty   /dev/sdb1
           1       8       33        -      faulty   /dev/sdc1
    [root@localhost ~]# mdadm -S /dev/md6
    mdadm: stopped /dev/md6

RAID 10 experiment
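The RAID 1+0 array below is built in two layers: two RAID 1 mirrors, then a RAID 0 stripe across them. The sketch chains the same three mdadm commands. The names `run`, `make_raid10_nested` and the `DRY_RUN` switch are hypothetical, and the sketch expects exactly four member partitions. Each create step may still stop at mdadm's "Continue creating array?" prompt if a member carries old metadata.

```shell
# run CMD...: executes CMD, or only prints it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# make_raid10_nested TOP MIRROR_A MIRROR_B DEV1 DEV2 DEV3 DEV4  (hypothetical)
# Mirrors DEV1+DEV2 into MIRROR_A and DEV3+DEV4 into MIRROR_B, then stripes
# the two mirrors into TOP with RAID 0.
make_raid10_nested() {
    if [ "$#" -ne 7 ]; then
        echo "usage: make_raid10_nested TOP MIRROR_A MIRROR_B DEV1 DEV2 DEV3 DEV4" >&2
        return 1
    fi
    local top=$1 mirror_a=$2 mirror_b=$3
    run mdadm -C -v "$mirror_a" -l 1 -n 2 "$4" "$5" &&
        run mdadm -C -v "$mirror_b" -l 1 -n 2 "$6" "$7" &&
        run mdadm -C -v "$top" -l 0 -n 2 "$mirror_a" "$mirror_b"
}
```

`DRY_RUN=1 make_raid10_nested /dev/md10 /dev/md1 /dev/md0 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1` prints the three commands used in this experiment. mdadm also offers a single-layer `raid10` level, listed in the introduction, which this walkthrough does not use.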

    [root@localhost ~]# mdadm -C -v /dev/md1 -l 1 -n 2 /dev/sdb1 /dev/sdc1
    mdadm: /dev/sdb1 appears to be part of a raid array: level=raid1 devices=2 ctime=Wed Apr 22 00:47:05 2020
    mdadm: partition table exists on /dev/sdb1 but will be lost or meaningless after creating array
    mdadm: Note: this array has metadata at the start and may not be suitable as a boot device.  If you plan to store '/boot' on this device please ensure that your boot-loader understands md/v1.x metadata, or use --metadata=0.90
    mdadm: /dev/sdc1 appears to be part of a raid array: level=raid1 devices=2 ctime=Wed Apr 22 00:47:05 2020
    mdadm: size set to 20953088K
    Continue creating array? y
    mdadm: Fail create md1 when using /sys/module/md_mod/parameters/new_array
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md1 started.
    [root@localhost ~]# mdadm -C -v /dev/md0 -l 1 -n 2 /dev/sdd1 /dev/sde1
    mdadm: /dev/sdd1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:54:25 2020
    mdadm: Note: this array has metadata at the start and may not be suitable as a boot device.  If you plan to store '/boot' on this device please ensure that your boot-loader understands md/v1.x metadata, or use --metadata=0.90
    mdadm: /dev/sde1 appears to be part of a raid array: level=raid6 devices=4 ctime=Tue Apr 21 17:54:25 2020
    mdadm: size set to 20953088K
    Continue creating array? y
    mdadm: Fail create md0 when using /sys/module/md_mod/parameters/new_array
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.
    [root@localhost ~]# mdadm -D /dev/md1
    /dev/md1:
          Version : 1.2
          Creation Time : Wed Apr 22 00:48:19 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)    # first RAID 1: 20G capacity
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 2
          Persistence : Superblock is persistent
          Update Time : Wed Apr 22 00:50:21 2020
          State : clean
          Active Devices : 2
          Working Devices : 2
          Failed Devices : 0
          Spare Devices : 0
          Consistency Policy : resync
          Name : localhost.localdomain:1  (local to host localhost.localdomain)
          UUID : 95cd9b90:8dcbbbef:7974f3aa:d38d7f5b
          Events : 17
        Number   Major   Minor   RaidDevice State
           0       8       17        0      active sync   /dev/sdb1
           1       8       33        1      active sync   /dev/sdc1
    [root@localhost ~]# mdadm -D /dev/md0
    /dev/md0:
          Version : 1.2
          Creation Time : Wed Apr 22 00:48:52 2020
          Raid Level : raid1
          Array Size : 20953088 (19.98 GiB 21.46 GB)    # second RAID 1: 20G capacity
          Used Dev Size : 20953088 (19.98 GiB 21.46 GB)
          Raid Devices : 2
          Total Devices : 2
          Persistence : Superblock is persistent
          Update Time : Wed Apr 22 00:50:44 2020
          State : clean, resyncing
          Active Devices : 2
          Working Devices : 2
          Failed Devices : 0
          Spare Devices : 0
          Consistency Policy : resync
          Resync Status : 96% complete
          Name : localhost.localdomain:0  (local to host localhost.localdomain)
          UUID : ae813945:1174d6cb:ad1e3a33:1303a7d3
          Events : 15
        Number   Major   Minor   RaidDevice State
           0       8       49        0      active sync   /dev/sdd1
           1       8       65        1      active sync   /dev/sde1

3. Create the RAID 1+0 array

    [root@localhost ~]# mdadm -C -v /dev/md10 -l 0 -n 2 /dev/md1 /dev/md0
    mdadm: chunk size defaults to 512K
    mdadm: Fail create md10 when using /sys/module/md_mod/parameters/new_array
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md10 started.

4. View the RAID 1+0 array information

    [root@localhost ~]# mdadm -D /dev/md10
    /dev/md10:
          Version : 1.2
          Creation Time : Wed Apr 22 00:55:41 2020
          Raid Level : raid0
          Array Size : 41871360 (39.93 GiB 42.88 GB)    # the resulting RAID 1+0 array: 40G capacity
          Raid Devices : 2
          Total Devices : 2
          Persistence : Superblock is persistent
          Update Time : Wed Apr 22 00:55:41 2020
          State : clean
          Active Devices : 2
          Working Devices : 2
          Failed Devices : 0
          Spare Devices : 0
          Chunk Size : 512K
          Consistency Policy : none
          Name : localhost.localdomain:10  (local to host localhost.localdomain)
          UUID : 09a95fcb:c9a2ec94:4461c81e:a9a65c2f
          Events : 0
        Number   Major   Minor   RaidDevice State
           0       9        1        0      active sync   /dev/md1
           1       9        0        1      active sync   /dev/md0
