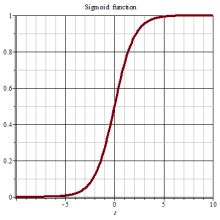

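In "Andrew Ng's Deep Learning: A Practical Guide to Deep Learning" we discussed why weight initialization matters, and we know that each layer's weights should be initialized with the number of neurons in the previous layer in mind. In this article we verify that with code.

This is an assignment from the second course of Andrew Ng's deep learning series. If you are also working through the course and have not done the assignment yet, I suggest you close this page and implement the code yourself from the assignment instructions first.

Let's first look at our dataset. After that comes the code for a simple three-layer network model. The model supports three initialization methods, and we implement them one by one. Then we look at how the different weight initialization methods perform.

We know that the weights of a neural network must not be initialized to zero. Suppose the weights of layer 1 are initialized to zero: during forward propagation every neuron in that layer computes the same value, so the next layer receives identical inputs. During backpropagation the weights then all receive the same update. This is the symmetry problem, and the network cannot learn. We demonstrate it with code and then look at how this initialization performs.

The results show performance no better than flipping a coin, which also gives 50% accuracy. The learning curve does not converge at all, which confirms that the weights must not be initialized to zero. We also plot the decision boundary.

To break the symmetry, we assign the parameters random values. Layer 0 is the input layer and needs no weights, so we start from layer 1 and go up to layer L. We then run the model and inspect the output.

The learning curve shows that the model converges. Although the weights are initialized randomly, they are large, which leads to exploding/vanishing gradients, and the final performance is not very satisfying. Again we plot the decision boundary.

Testing He initialization, the accuracy reaches 96%, which is already a good result.

Observing the distribution of activations in the hidden layers is very instructive. Here we run a simple experiment to see how the initial weight values affect that distribution. We feed randomly generated input data into a 5-layer neural network (with sigmoid as the activation function) and plot the distribution of each layer's activations as histograms.

With weights of standard deviation 1, the activations of each layer are skewed toward 0 and 1. The sigmoid used here is an S-shaped function, and as its output approaches 0 (or 1), its derivative approaches 0. A distribution skewed toward 0 and 1 therefore makes the gradients in backpropagation shrink until they vanish. This is the vanishing gradient problem, and it can become more severe as networks get deeper.

Next, we set the standard deviation of the weights to 0.01 and run the experiment again with a small change to the code. This time the distribution is concentrated around 0.5. Because it is not skewed toward 0 and 1 as in the previous example, vanishing gradients do not occur. However, the activations are still concentrated in a narrow range, which is a serious problem for expressiveness; in other words, there is a symmetry problem.

Why? If many neurons output almost the same value, there is no point in having them all. For example, if 100 neurons output almost the same value, one neuron could express essentially the same thing. Activations concentrated in a narrow range therefore lead to "limited expressiveness".

The activations of each layer should have a suitably broad distribution, because a neural network learns efficiently when diverse data flows between its layers. Conversely, if the data passed along is skewed or concentrated, vanishing gradients or the symmetry problem appear, and learning may not proceed smoothly.

Next we try the Xavier initial values, which in the code use a standard deviation of sqrt(1/n) for a layer with n input nodes.

Because the sigmoid and tanh functions are symmetric, they are well suited to the Xavier initial values. When the activation function is ReLU, the He initial values are generally recommended instead.

Now let's look at the distribution of activations when the activation function is ReLU. We show three experiments: initial weights drawn from a Gaussian distribution with standard deviation 0.01, the Xavier initial values, and the He initial values designed for ReLU. We also adjust the plotting parameters so that the result is easier to see.

Before seeing this result, I thought He initialization merely changed one coefficient of Xavier initialization, from 1 to 2. It turns out to make a big difference!

From the above, we should now know: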

Introduction

Dataset

    import numpy as np
    import matplotlib.pyplot as plt
    import sklearn
    import sklearn.datasets
    from init_utils import sigmoid, relu, compute_loss, forward_propagation, backward_propagation
    from init_utils import update_parameters, predict, load_dataset, plot_decision_boundary, predict_dec

    %matplotlib inline
    plt.rcParams['figure.figsize'] = (7.0, 4.0)  # set default size of plots
    plt.rcParams['image.interpolation'] = 'nearest'
    plt.rcParams['image.cmap'] = 'gray'

    # load image dataset: blue/red dots in circles
    train_X, train_Y, test_X, test_Y = load_dataset()

This data has a circular distribution generated with sklearn.datasets.make_circles. Clearly this is a nonlinear problem: no single straight line can separate the two classes.

The code in init_utils is given below. It is only a simple three-layer network used for demonstration. In "Implementing a Deep Neural Network in Pure Python" we already saw how to implement a network of arbitrary depth.

    import numpy as np
    import matplotlib.pyplot as plt
    import h5py
    import sklearn
    import sklearn.datasets


    def sigmoid(x):
        """
        Compute the sigmoid of x

        Arguments:
        x -- A scalar or numpy array of any size.

        Return:
        s -- sigmoid(x)
        """
        s = 1 / (1 + np.exp(-x))
        return s


    def relu(x):
        """
        Compute the relu of x

        Arguments:
        x -- A scalar or numpy array of any size.

        Return:
        s -- relu(x)
        """
        s = np.maximum(0, x)
        return s


    def forward_propagation(X, parameters):
        """
        Implements the forward propagation.

        Arguments:
        X -- input dataset, of shape (input size, number of examples)
        parameters -- python dictionary containing your parameters
                      "W1", "b1", "W2", "b2", "W3", "b3"

        Returns:
        a3 -- output of the last (sigmoid) layer
        cache -- tuple of intermediate values needed by backward_propagation()
        """
        # retrieve parameters
        W1 = parameters["W1"]
        b1 = parameters["b1"]
        W2 = parameters["W2"]
        b2 = parameters["b2"]
        W3 = parameters["W3"]
        b3 = parameters["b3"]

        # LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID
        z1 = np.dot(W1, X) + b1
        a1 = relu(z1)
        z2 = np.dot(W2, a1) + b2
        a2 = relu(z2)
        z3 = np.dot(W3, a2) + b3
        a3 = sigmoid(z3)

        cache = (z1, a1, W1, b1, z2, a2, W2, b2, z3, a3, W3, b3)

        return a3, cache


    def backward_propagation(X, Y, cache):
        """
        Implement the backward propagation.

        Arguments:
        X -- input dataset, of shape (input size, number of examples)
        Y -- true "label" vector, of shape (1, number of examples)
        cache -- cache output from forward_propagation()

        Returns:
        gradients -- A dictionary with the gradients with respect to each
                     parameter, activation and pre-activation variables
        """
        m = X.shape[1]
        (z1, a1, W1, b1, z2, a2, W2, b2, z3, a3, W3, b3) = cache

        dz3 = 1. / m * (a3 - Y)
        dW3 = np.dot(dz3, a2.T)
        db3 = np.sum(dz3, axis=1, keepdims=True)

        da2 = np.dot(W3.T, dz3)
        dz2 = np.multiply(da2, np.int64(a2 > 0))
        dW2 = np.dot(dz2, a1.T)
        db2 = np.sum(dz2, axis=1, keepdims=True)

        da1 = np.dot(W2.T, dz2)
        dz1 = np.multiply(da1, np.int64(a1 > 0))
        dW1 = np.dot(dz1, X.T)
        db1 = np.sum(dz1, axis=1, keepdims=True)

        gradients = {"dz3": dz3, "dW3": dW3, "db3": db3,
                     "da2": da2, "dz2": dz2, "dW2": dW2, "db2": db2,
                     "da1": da1, "dz1": dz1, "dW1": dW1, "db1": db1}

        return gradients


    def update_parameters(parameters, grads, learning_rate):
        """
        Update parameters using gradient descent

        Arguments:
        parameters -- python dictionary containing your parameters
        grads -- python dictionary containing your gradients
        learning_rate -- step size of the gradient descent update

        Returns:
        parameters -- python dictionary containing your updated parameters
                      parameters['W' + str(i)] = ...
                      parameters['b' + str(i)] = ...
        """
        L = len(parameters) // 2  # number of layers in the neural networks

        # Update rule for each parameter
        for k in range(L):
            parameters["W" + str(k+1)] = parameters["W" + str(k+1)] - learning_rate * grads["dW" + str(k+1)]
            parameters["b" + str(k+1)] = parameters["b" + str(k+1)] - learning_rate * grads["db" + str(k+1)]

        return parameters


    def compute_loss(a3, Y):
        """
        Implement the loss function

        Arguments:
        a3 -- post-activation, output of forward propagation
        Y -- "true" labels vector, same shape as a3

        Returns:
        loss - value of the loss function
        """
        m = Y.shape[1]
        logprobs = np.multiply(-np.log(a3), Y) + np.multiply(-np.log(1 - a3), 1 - Y)
        loss = 1. / m * np.nansum(logprobs)

        return loss


    def load_cat_dataset():
        train_dataset = h5py.File('datasets/train_catvnoncat.h5', "r")
        train_set_x_orig = np.array(train_dataset["train_set_x"][:])  # your train set features
        train_set_y_orig = np.array(train_dataset["train_set_y"][:])  # your train set labels

        test_dataset = h5py.File('datasets/test_catvnoncat.h5', "r")
        test_set_x_orig = np.array(test_dataset["test_set_x"][:])  # your test set features
        test_set_y_orig = np.array(test_dataset["test_set_y"][:])  # your test set labels

        classes = np.array(test_dataset["list_classes"][:])  # the list of classes

        train_set_y = train_set_y_orig.reshape((1, train_set_y_orig.shape[0]))
        test_set_y = test_set_y_orig.reshape((1, test_set_y_orig.shape[0]))

        train_set_x_orig = train_set_x_orig.reshape(train_set_x_orig.shape[0], -1).T
        test_set_x_orig = test_set_x_orig.reshape(test_set_x_orig.shape[0], -1).T

        train_set_x = train_set_x_orig / 255
        test_set_x = test_set_x_orig / 255

        return train_set_x, train_set_y, test_set_x, test_set_y, classes


    def predict(X, y, parameters):
        """
        This function is used to predict the results of a n-layer neural network.

        Arguments:
        X -- data set of examples you would like to label
        y -- true labels, used only to print the accuracy
        parameters -- parameters of the trained model

        Returns:
        p -- predictions for the given dataset X
        """
        m = X.shape[1]
        # np.int was removed from recent NumPy releases; the builtin int is equivalent here
        p = np.zeros((1, m), dtype=int)

        # Forward propagation
        a3, caches = forward_propagation(X, parameters)

        # convert probas to 0/1 predictions
        for i in range(0, a3.shape[1]):
            if a3[0, i] > 0.5:
                p[0, i] = 1
            else:
                p[0, i] = 0

        # print results
        print("Accuracy: " + str(np.mean((p[0, :] == y[0, :]))))

        return p


    def plot_decision_boundary(model, X, y):
        # Set min and max values and give it some padding
        x_min, x_max = X[0, :].min() - 1, X[0, :].max() + 1
        y_min, y_max = X[1, :].min() - 1, X[1, :].max() + 1
        h = 0.01
        # Generate a grid of points with distance h between them
        xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
        # Predict the function value for the whole grid
        Z = model(np.c_[xx.ravel(), yy.ravel()])
        Z = Z.reshape(xx.shape)
        # Plot the contour and training examples
        plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral)
        plt.ylabel('x2')
        plt.xlabel('x1')
        plt.scatter(X[0, :], X[1, :], c=np.squeeze(y), cmap=plt.cm.Spectral)
        plt.show()


    def predict_dec(parameters, X):
        """
        Used for plotting decision boundary.

        Arguments:
        parameters -- python dictionary containing your parameters
        X -- input data of size (m, K)

        Returns
        predictions -- vector of predictions of our model (red: 0 / blue: 1)
        """
        # Predict using forward propagation and a classification threshold of 0.5
        a3, cache = forward_propagation(X, parameters)
        predictions = (a3 > 0.5)
        return predictions


    def load_dataset():
        np.random.seed(1)
        train_X, train_Y = sklearn.datasets.make_circles(n_samples=300, noise=.05)
        np.random.seed(2)
        test_X, test_Y = sklearn.datasets.make_circles(n_samples=100, noise=.05)
        # Visualize the data
        plt.scatter(train_X[:, 0], train_X[:, 1], c=train_Y, s=40, cmap=plt.cm.Spectral)
        train_X = train_X.T
        train_Y = train_Y.reshape((1, train_Y.shape[0]))
        test_X = test_X.T
        test_Y = test_Y.reshape((1, test_Y.shape[0]))
        return train_X, train_Y, test_X, test_Y

Neural network model

    def model(X, Y, learning_rate=0.01, num_iterations=15000, print_cost=True, initialization="he"):
        """
        LINEAR->RELU->LINEAR->RELU->LINEAR->SIGMOID.

        Arguments:
        X -- input data, of shape (2, number of examples)
        Y -- label vector (0 for red dots; 1 for blue dots), of shape (1, number of examples)
        learning_rate -- learning rate
        num_iterations -- number of iterations
        print_cost -- whether to print the loss
        initialization -- initialization method ("zeros", "random" or "he")

        Returns:
        parameters -- parameters learned by the model
        """
        grads = {}
        costs = []  # to keep track of the loss
        m = X.shape[1]  # number of examples
        layers_dims = [X.shape[0], 10, 5, 1]

        # Initialize parameters dictionary.
        if initialization == "zeros":
            parameters = initialize_parameters_zeros(layers_dims)
        elif initialization == "random":
            parameters = initialize_parameters_random(layers_dims)
        elif initialization == "he":
            parameters = initialize_parameters_he(layers_dims)

        # Loop (gradient descent)
        for i in range(0, num_iterations):
            # Forward propagation: LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID.
            a3, cache = forward_propagation(X, parameters)

            # Loss
            cost = compute_loss(a3, Y)

            # Backward propagation
            grads = backward_propagation(X, Y, cache)

            # Update parameters
            parameters = update_parameters(parameters, grads, learning_rate)

            # Print and record the cost every 1000 iterations
            if print_cost and i % 1000 == 0:
                print("Cost after iteration {}: {}".format(i, cost))
                costs.append(cost)

        # Plot the learning curve (one point per 1000 iterations)
        plt.plot(costs)
        plt.ylabel('cost')
        plt.xlabel('iterations (per thousands)')
        plt.title("Learning rate =" + str(learning_rate))
        plt.show()

        return parameters

Different weight initialization methods

Zero initialization

    def initialize_parameters_zeros(layers_dims):
        """
        Arguments:
        layers_dims -- list containing the size of each layer

        Returns:
        parameters -- dictionary containing the parameters "W1", "b1", ..., "WL", "bL":
                      W1 -- weight matrix of the first layer (layers_dims[1], layers_dims[0])
                      b1 -- bias vector of the first layer (layers_dims[1], 1)
                      ...
                      WL -- weight matrix of the last layer (layers_dims[L], layers_dims[L-1])
                      bL -- bias vector of the last layer (layers_dims[L], 1)
        """
        parameters = {}
        L = len(layers_dims)  # number of layers in the network

        for l in range(1, L):
            parameters['W' + str(l)] = np.zeros((layers_dims[l], layers_dims[l-1]))
            parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))
        return parameters

    parameters = model(train_X, train_Y, initialization="zeros")
    print("On the train set:")
    predictions_train = predict(train_X, train_Y, parameters)
    print("On the test set:")
    predictions_test = predict(test_X, test_Y, parameters)

Output:

    Cost after iteration 0: 0.6931471805599453
    Cost after iteration 1000: 0.6931471805599453
    Cost after iteration 2000: 0.6931471805599453
    Cost after iteration 3000: 0.6931471805599453
    Cost after iteration 4000: 0.6931471805599453
    Cost after iteration 5000: 0.6931471805599453
    Cost after iteration 6000: 0.6931471805599453
    Cost after iteration 7000: 0.6931471805599453
    Cost after iteration 8000: 0.6931471805599453
    Cost after iteration 9000: 0.6931471805599453
    Cost after iteration 10000: 0.6931471805599455
    Cost after iteration 11000: 0.6931471805599453
    Cost after iteration 12000: 0.6931471805599453
    Cost after iteration 13000: 0.6931471805599453
    Cost after iteration 14000: 0.6931471805599453

    On the train set:
    Accuracy: 0.5
    On the test set:
    Accuracy: 0.5

Plotting the decision boundary:

    plt.title("Model with Zeros initialization")
    axes = plt.gca()
    axes.set_xlim([-1.5, 1.5])
    axes.set_ylim([-1.5, 1.5])
    plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)
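
To make the symmetry argument concrete, here is a minimal sketch (not part of the assignment code) that runs a single forward and backward pass through a small ReLU network whose parameters are all zero. The helper name `zero_init_gradients`, the two-layer shape and the toy data are assumptions chosen for illustration. With zero weights every hidden activation is 0, so both weight gradients are exactly zero and gradient descent never moves the weights.

```python
import numpy as np

def zero_init_gradients(X, Y, n_hidden=4):
    """One forward/backward pass of a LINEAR->RELU->LINEAR->SIGMOID net
    whose parameters are all zero. Hypothetical helper for illustration.
    Returns (a2, dW1, dW2)."""
    n_x, m = X.shape
    W1 = np.zeros((n_hidden, n_x))
    b1 = np.zeros((n_hidden, 1))
    W2 = np.zeros((1, n_hidden))
    b2 = np.zeros((1, 1))

    # Forward pass: every hidden unit outputs 0, so the output is sigmoid(0)
    z1 = W1 @ X + b1
    a1 = np.maximum(0, z1)
    z2 = W2 @ a1 + b2
    a2 = 1 / (1 + np.exp(-z2))

    # Backward pass, same formulas as backward_propagation in init_utils
    dz2 = (a2 - Y) / m
    dW2 = dz2 @ a1.T          # zero because a1 is zero
    da1 = W2.T @ dz2          # zero because W2 is zero
    dz1 = da1 * (a1 > 0)
    dW1 = dz1 @ X.T
    return a2, dW1, dW2

rng = np.random.default_rng(0)
X = rng.standard_normal((2, 6))          # toy inputs, not the circles dataset
Y = np.array([[0, 1, 0, 1, 1, 0]])
a2, dW1, dW2 = zero_init_gradients(X, Y)
print(a2)                                 # every prediction is 0.5
print(np.abs(dW1).max(), np.abs(dW2).max())
```

A constant output of 0.5 gives a loss of ln 2 ≈ 0.6931, which matches the cost printed at every iteration in the log above. Because `predict` uses the threshold `a3 > 0.5`, an output of exactly 0.5 is classified as 0.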

No matter what the input is, the model predicts 0.

Random initialization

    # GRADED FUNCTION: initialize_parameters_random

    def initialize_parameters_random(layers_dims):
        """
        Arguments:
        layers_dims -- list containing the size of each layer

        Returns:
        parameters
        """
        np.random.seed(3)  # set the random seed
        parameters = {}
        L = len(layers_dims)  # number of layers in the network

        for l in range(1, L):
            parameters['W' + str(l)] = np.random.randn(layers_dims[l], layers_dims[l-1]) * 10
            parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))

        return parameters

The `* 10` factor gives every layer large initial parameter values.

    parameters = model(train_X, train_Y, initialization="random")
    print("On the train set:")
    predictions_train = predict(train_X, train_Y, parameters)
    print("On the test set:")
    predictions_test = predict(test_X, test_Y, parameters)

Output:

    Cost after iteration 0: inf
    Cost after iteration 1000: 0.6239567039908781
    Cost after iteration 2000: 0.5978043872838292
    Cost after iteration 3000: 0.563595830364618
    Cost after iteration 4000: 0.5500816882570866
    Cost after iteration 5000: 0.5443417928662615
    Cost after iteration 6000: 0.5373553777823036
    Cost after iteration 7000: 0.4700141958024487
    Cost after iteration 8000: 0.3976617665785177
    Cost after iteration 9000: 0.39344405717719166
    Cost after iteration 10000: 0.39201765232720626
    Cost after iteration 11000: 0.38910685278803786
    Cost after iteration 12000: 0.38612995897697244
    Cost after iteration 13000: 0.3849735792031832
    Cost after iteration 14000: 0.38275100578285265

    On the train set:
    Accuracy: 0.83
    On the test set:
    Accuracy: 0.86

In fact, simply removing the `* 10` already gives good results, so this simple three-layer network cannot really show the difference between random initialization and the He initialization below. The final section of "Implementing a Deep Neural Network in Pure Python" shows the effect of He initialization (Xavier initialization) more clearly.

    plt.title("Model with large random initialization")
    axes = plt.gca()
    axes.set_xlim([-1.5, 1.5])
    axes.set_ylim([-1.5, 1.5])
    plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)
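
The `inf` cost at iteration 0 in the log above is consistent with sigmoid saturation: when a pre-activation is large in magnitude, the sigmoid output rounds to exactly 0 or 1 in floating point, and the log term of the loss becomes infinite. The sketch below illustrates this with hand-picked pre-activation values (an assumption for illustration, not values taken from the trained model); `logistic_loss` is a hypothetical helper that applies the same formula as `compute_loss` before averaging.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def logistic_loss(a, y):
    """Per-example cross-entropy, same formula as compute_loss before averaging."""
    # Silence the log(0) warning so the infinite value is returned quietly
    with np.errstate(divide="ignore", invalid="ignore"):
        return -(y * np.log(a) + (1 - y) * np.log(1 - a))

z = np.array([0.5, 5.0, 40.0])   # illustrative pre-activations
y = np.array([0.0, 0.0, 0.0])    # the true label is 0 in each case
a = sigmoid(z)
loss = logistic_loss(a, y)
local_grad = a * (1 - a)         # derivative of the sigmoid at each z

print(a)            # the last entry is exactly 1.0 in float64
print(loss)         # the last entry is inf
print(local_grad)   # the last entry is exactly 0.0
```

The same saturation drives the sigmoid derivative `a * (1 - a)` to zero, which is one way large initial weights slow learning down.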

The decision boundary shows that many regions are misclassified.

He initialization

    # GRADED FUNCTION: initialize_parameters_he

    def initialize_parameters_he(layers_dims):
        """
        Arguments:
        layers_dims -- list containing the size of each layer

        Returns:
        parameters -- dictionary containing the parameters "W1", "b1", ..., "WL", "bL"
        """
        np.random.seed(3)
        parameters = {}
        L = len(layers_dims) - 1  # number of layers, not counting the input layer

        for l in range(1, L + 1):
            # Scale by sqrt(2 / n_prev), where n_prev is the size of the previous layer
            parameters['W' + str(l)] = np.random.randn(layers_dims[l], layers_dims[l-1]) * np.sqrt(2. / layers_dims[l-1])
            parameters['b' + str(l)] = np.zeros((layers_dims[l], 1))

        return parameters

    parameters = model(train_X, train_Y, initialization="he")
    print("On the train set:")
    predictions_train = predict(train_X, train_Y, parameters)
    print("On the test set:")
    predictions_test = predict(test_X, test_Y, parameters)

Output:

    Cost after iteration 0: 0.8830537463419761
    Cost after iteration 1000: 0.6879825919728063
    Cost after iteration 2000: 0.6751286264523371
    Cost after iteration 3000: 0.6526117768893807
    Cost after iteration 4000: 0.6082958970572938
    Cost after iteration 5000: 0.5304944491717495
    Cost after iteration 6000: 0.4138645817071794
    Cost after iteration 7000: 0.3117803464844441
    Cost after iteration 8000: 0.23696215330322562
    Cost after iteration 9000: 0.18597287209206836
    Cost after iteration 10000: 0.15015556280371817
    Cost after iteration 11000: 0.12325079292273552
    Cost after iteration 12000: 0.09917746546525932
    Cost after iteration 13000: 0.08457055954024274
    Cost after iteration 14000: 0.07357895962677362

    On the train set:
    Accuracy: 0.9933333333333333
    On the test set:
    Accuracy: 0.96

Plotting the decision boundary:

    plt.title("Model with He initialization")
    axes = plt.gca()
    axes.set_xlim([-1.5, 1.5])
    axes.set_ylim([-1.5, 1.5])
    plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)
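
As a rough check of what the `np.sqrt(2. / layers_dims[l-1])` factor is doing, the sketch below pushes ReLU activations through a single wide layer and compares He-scaled weights with unscaled ones. The layer width, sample count and variable names are assumptions chosen for illustration and are unrelated to the 10- and 5-unit layers of the model above. The idea is that ReLU halves the second moment of a zero-mean signal, and the factor 2 in the weight variance compensates for that.

```python
import numpy as np

rng = np.random.default_rng(3)
n_in, n_out, m = 500, 500, 2000          # illustrative sizes

z_prev = rng.standard_normal((n_in, m))  # unit-variance pre-activations
a_prev = np.maximum(0, z_prev)           # ReLU output of the previous layer

W_he = rng.standard_normal((n_out, n_in)) * np.sqrt(2.0 / n_in)
W_plain = rng.standard_normal((n_out, n_in))   # no scaling, for comparison

z_he = W_he @ a_prev
z_plain = W_plain @ a_prev

print("std of He weights:", W_he.std(), "target:", np.sqrt(2.0 / n_in))
print("std of next pre-activations (He):   ", z_he.std())
print("std of next pre-activations (plain):", z_plain.std())
```

With He scaling the next layer's pre-activations keep a standard deviation close to that of the previous layer, whereas the unscaled weights inflate it by roughly `sqrt(n_in / 2)`.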

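The decision boundary now separates the red and blue points almost perfectly, which is a good result.

Weight initialization from the viewpoint of activation distributions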

    # coding: utf-8
    import numpy as np
    import matplotlib.pyplot as plt


    def sigmoid(x):
        return 1 / (1 + np.exp(-x))


    def ReLU(x):
        return np.maximum(0, x)


    def tanh(x):
        return np.tanh(x)


    input_data = np.random.randn(1000, 100)  # 1000 data points
    node_num = 100  # number of nodes (neurons) in each hidden layer
    hidden_layer_size = 5  # 5 hidden layers
    activations = {}  # activation results are stored here

    x = input_data

    for i in range(hidden_layer_size):
        if i != 0:
            x = activations[i-1]

        # Change the initial values to experiment!
        w = np.random.randn(node_num, node_num) * 1
        # w = np.random.randn(node_num, node_num) * 0.01
        # w = np.random.randn(node_num, node_num) * np.sqrt(1.0 / node_num)
        # w = np.random.randn(node_num, node_num) * np.sqrt(2.0 / node_num)

        a = np.dot(x, w)

        # Change the type of activation function too, to experiment!
        z = sigmoid(a)
        # z = ReLU(a)
        # z = tanh(a)

        activations[i] = z

    # Draw the histograms
    for i, a in activations.items():
        plt.subplot(1, len(activations), i+1)
        plt.title(str(i+1) + "-layer")
        if i != 0:
            plt.yticks([], [])
        plt.hist(a.flatten(), 30, range=(0, 1))
    plt.show()

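Here we assume a neural network with 5 layers of 100 units each. We generate 1000 data points from a Gaussian distribution as input and pass them through the 5-layer network.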
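
The same sigmoid experiment can also be summarized with numbers instead of histograms. The sketch below is a variant of the script above under stated assumptions: `layer_stats` is a hypothetical helper, the thresholds 0.05 and 0.95 used to call an activation "saturated" are arbitrary, and the seed is fixed only for repeatability. It reports, for the last layer, the fraction of saturated activations and the standard deviation of the activations.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def layer_stats(weight_std, node_num=100, n_layers=5, n_samples=1000, seed=0):
    """Return a list of (saturated_fraction, std) for each layer's sigmoid output."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, node_num))
    stats = []
    for _ in range(n_layers):
        w = rng.standard_normal((node_num, node_num)) * weight_std
        x = sigmoid(x @ w)
        saturated = np.mean((x < 0.05) | (x > 0.95))   # close to 0 or 1
        stats.append((saturated, x.std()))
    return stats

scales = {"std=1": 1.0, "std=0.01": 0.01, "Xavier": np.sqrt(1.0 / 100)}
results = {name: layer_stats(std)[-1] for name, std in scales.items()}
for name, (sat, spread) in results.items():
    print(f"{name:9s} last layer: saturated={sat:.2f}, std={spread:.3f}")
```

A high saturated fraction corresponds to activations piling up near 0 and 1, while a very small standard deviation corresponds to activations collapsing around 0.5.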

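The derivative (gradient) of the sigmoid function is almost 0 where its output is near 0 or 1, which is what causes the vanishing gradient problem.

    # Change the initial values to experiment!
    # w = np.random.randn(node_num, node_num) * 1
    w = np.random.randn(node_num, node_num) * 0.01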

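This is the distribution of each layer's activations when the weights come from a Gaussian distribution with standard deviation 0.01.

    # Change the initial values to experiment!
    # w = np.random.randn(node_num, node_num) * 0.01
    w = np.random.randn(node_num, node_num) * np.sqrt(1.0 / node_num)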

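This is the result with the Xavier initial values. The deeper the layer, the more skewed the histogram becomes, but the distribution is broader than before. Because the data flowing between layers has a suitable spread, the expressiveness of the sigmoid function is not restricted, and efficient learning can be expected.

    for i, a in activations.items():
        plt.subplot(1, len(activations), i+1)
        plt.title(str(i+1) + "-layer")
        if i != 0:
            plt.yticks([], [])
        # plt.xlim(0.1, 1)
        plt.ylim(0, 7000)
        plt.hist(a.flatten(), 30, range=(0, 1))
    plt.show()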
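
The ReLU comparison of the three initializations can likewise be checked numerically. The sketch below is an illustrative variant of the experiment script, not the original code: `relu_layer_std` is a hypothetical helper and the fixed seed is an assumption made for repeatability. It prints the standard deviation of the ReLU activations at each of the 5 layers for each weight scale.

```python
import numpy as np

def relu_layer_std(weight_std, node_num=100, n_layers=5, n_samples=1000, seed=0):
    """Standard deviation of the ReLU activations at each layer for one weight scale."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, node_num))
    stds = []
    for _ in range(n_layers):
        w = rng.standard_normal((node_num, node_num)) * weight_std
        x = np.maximum(0, x @ w)
        stds.append(x.std())
    return stds

node_num = 100
scales = {
    "std=0.01": 0.01,
    "Xavier": np.sqrt(1.0 / node_num),
    "He": np.sqrt(2.0 / node_num),
}
relu_stds = {name: relu_layer_std(s) for name, s in scales.items()}
for name, stds in relu_stds.items():
    print(f"{name:9s}", ["%.6f" % s for s in stds])
```

In this setup the spread collapses toward zero with standard deviation 0.01, shrinks steadily from layer to layer with Xavier, and stays at roughly the same level with He, in line with the histograms the text describes.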

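With a Gaussian distribution of standard deviation 0.01, the activations of every layer are very small. Very small values flowing through the network mean that the weight gradients in backpropagation are also very small. This is a serious problem: in practice, learning makes almost no progress.

Next is the result with the Xavier initial values. In this case the skew grows little by little as the layers get deeper. As the network deepens and the activations become more skewed, vanishing gradients appear during learning.

With the He initial values, the spread of the distribution is the same in every layer. Because the spread of the data stays the same even as the layers get deeper, suitable values are also passed along during backpropagation.

Summary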
